PCIe 3.1 Controller


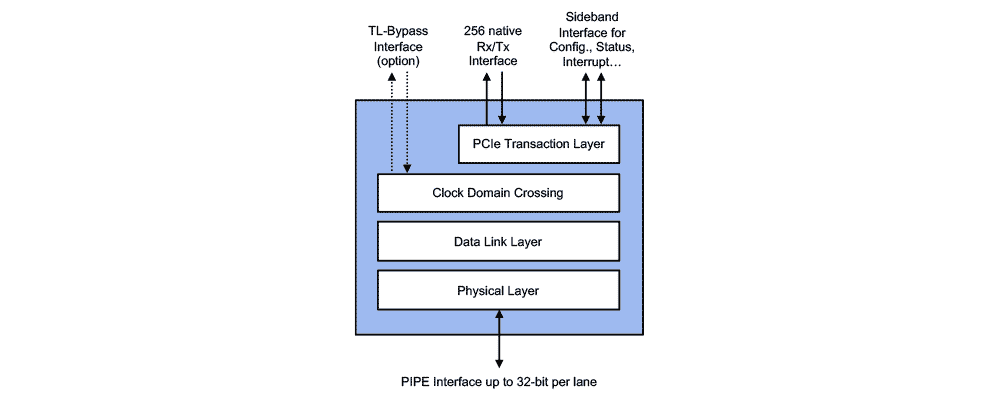

The PCIe 3.1 Controller (formerly XpressRICH) is designed for maximum PCI Express (PCIe) 3.1 performance, with broad design flexibility and ease of integration. It is fully compliant with the PCIe 3.1 and 3.0 specifications. A PCIe 3.1 Controller with AXI (formerly XpressRICH-AXI) is also available. The controller delivers high-bandwidth, low-latency connectivity for demanding data center, edge, and graphics applications.

How the PCIe 3.1 Controller Works

The PCIe 3.1 Controller is configurable and scalable IP designed for ASIC and FPGA implementation. It supports the PCIe 3.1/3.0 specifications, as well as the PHY Interface for PCI Express (PIPE) specification. The IP can be configured to support endpoint, root port, switch port, and dual-mode topologies, allowing for a variety of use models.

The provided Graphical User Interface (GUI) Wizard lets designers tailor the IP to their exact requirements by enabling, disabling, and adjusting a wide range of parameters, including data path size, PIPE interface width, low-power support, SR-IOV, ECC, and AER, to optimize throughput, latency, size, and power.

The PCIe 3.1 Controller is verified using multiple PCIe VIPs and test suites, and is silicon proven in hundreds of designs in production. Rambus integrates and validates the PCIe 3.1 Controller with the customer’s choice of 3rd-party PCIe 3.1 PHY.

Data Center Evolution: The Leap to 64 GT/s Signaling with PCI Express 6.1

The PCI Express® (PCIe®) interface is the critical backbone that moves data at high bandwidth and low latency between various compute nodes such as CPUs, GPUs, FPGAs, and workload-specific accelerators. With the rapid rise in bandwidth demands of advanced workloads such as AI/ML training, PCIe 6.1 jumps signaling to 64 GT/s with some of the biggest changes yet in the standard.

Solution Offerings

PCIe 3.1 Controller

PCI Express layer

- Compliant with the PCI Express 3.1/3.0 and PIPE (16- and 32-bit) specifications

- Compliant with the PCI-SIG Single-Root I/O Virtualization (SR-IOV) specification

- Supports Endpoint, Root Port, Dual-mode, and Switch Port configurations

- Supports x16, x8, x4, x2, and x1 link widths at 8 GT/s, 5 GT/s, and 2.5 GT/s

- Supports AER, ECRC, ECC, MSI, MSI-X, Multi-function, crosslink, and other optional features

- Additional optional features include OBFF, TPH, ARI, LTR, IDO, L1 PM substates, etc.
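As a rough guide to what the link widths and rates above mean in practice, the sketch below computes per-direction bandwidth after line encoding (8b/10b at 2.5 and 5 GT/s, 128b/130b at 8 GT/s, as defined by the PCIe Base Specification) and the PIPE clock needed for each data-bus width. It assumes the "16- and 32-bit" figures are per-lane PIPE data widths and ignores TLP, DLLP, and flow-control overhead, so real payload throughput is lower. The helper names are illustrative only and are not part of any Rambus deliverable.

```python
# Line encoding per the PCIe Base Specification:
#   2.5 and 5.0 GT/s use 8b/10b, so each byte at the PIPE costs 10 line bits;
#   8.0 GT/s uses 128b/130b; the 2-bit sync header per 16-byte block is absorbed
#   by periodic data-valid stalls on the PIPE, so each byte counts as 8 line bits.
ENCODING = {
    2.5: {"efficiency": 8 / 10, "bits_per_pipe_byte": 10},
    5.0: {"efficiency": 8 / 10, "bits_per_pipe_byte": 10},
    8.0: {"efficiency": 128 / 130, "bits_per_pipe_byte": 8},
}
LINK_WIDTHS = (1, 2, 4, 8, 16)
PIPE_WIDTHS = (16, 32)  # assumption: per-lane PIPE data-bus widths, in bits


def link_gbps(rate_gts: float, lanes: int) -> float:
    """Per-direction bandwidth after line encoding, before TLP/DLLP overhead."""
    if rate_gts not in ENCODING or lanes not in LINK_WIDTHS:
        raise ValueError(f"unsupported link: x{lanes} at {rate_gts} GT/s")
    return rate_gts * lanes * ENCODING[rate_gts]["efficiency"]


def pipe_pclk_mhz(rate_gts: float, pipe_width: int) -> float:
    """PIPE clock needed to carry one lane's bytes over a pipe_width-bit bus."""
    if rate_gts not in ENCODING or pipe_width not in PIPE_WIDTHS:
        raise ValueError(f"unsupported PIPE mode: {pipe_width}-bit at {rate_gts} GT/s")
    bytes_per_second = rate_gts * 1e9 / ENCODING[rate_gts]["bits_per_pipe_byte"]
    return bytes_per_second / (pipe_width // 8) / 1e6


if __name__ == "__main__":
    for rate in (2.5, 5.0, 8.0):
        print(f"x16 at {rate} GT/s: {link_gbps(rate, 16):.1f} Gb/s per direction, "
              f"PCLK {pipe_pclk_mhz(rate, 16):.0f} MHz (16-bit) / "
              f"{pipe_pclk_mhz(rate, 32):.0f} MHz (32-bit)")
```

Doubling the PIPE width halves the PIPE clock for the same rate, which is the usual trade-off between PHY-side timing and interface wiring.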

User Interface layer

- 256-bit transmit/receive low-latency user interface

- User-selectable Transaction/Application Layer clock frequency

- Sideband signaling for PCIe configuration access, internal status monitoring, debug, and more

- Optional Transaction Layer bypass
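To show how the 256-bit user interface relates to the link, the sketch below computes the slowest Transaction/Application Layer clock that keeps pace with a Gen3 link, and the peak interface throughput at a given clock. It assumes one full 256-bit beat per cycle with no header or framing overhead; the function names are hypothetical. For Gen3 x16 the result is roughly 492 MHz, and at the 8 MHz floor listed under Unique Features & Capabilities the interface peaks at about 2 Gb/s.

```python
USER_IF_BITS = 256  # transmit/receive user-interface width listed above


def gen3_link_gbps(lanes: int) -> float:
    """Per-direction Gen3 (8 GT/s, 128b/130b) bandwidth after line encoding."""
    return 8.0 * lanes * 128 / 130


def min_user_clock_mhz(link_gbps: float, width_bits: int = USER_IF_BITS) -> float:
    """Lowest clock at which a width_bits-wide, one-beat-per-cycle interface keeps pace."""
    return link_gbps * 1e3 / width_bits


def user_if_gbps(clock_mhz: float, width_bits: int = USER_IF_BITS) -> float:
    """Peak interface throughput at clock_mhz, ignoring TLP header and framing overhead."""
    return clock_mhz * width_bits / 1e3


if __name__ == "__main__":
    for lanes in (16, 8, 4):
        need = min_user_clock_mhz(gen3_link_gbps(lanes))
        print(f"Gen3 x{lanes}: at least {need:.1f} MHz on a {USER_IF_BITS}-bit interface")
    # A slow application clock caps throughput well below the link rate.
    print(f"At 8 MHz: {user_if_gbps(8):.3f} Gb/s")
```

Because the clock is user-selectable, a narrower link such as x4 can run the interface at a proportionally lower frequency without becoming the bottleneck.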

Unique Features & Capabilities

- Dynamically adjustable application-layer frequency, down to 8 MHz, for increased power savings

- Optional MSI/MSI-X register remapping to memory for reduced gate count when SR-IOV is implemented

- Configurable pipelining enables full-speed operation on Intel and Xilinx FPGAs, with full support for production FPGA designs up to Gen3 x16 using the same RTL code

- Ultra-low transmit and receive latency (excluding PHY)

- Smart buffer management on receive side (Rx Stream) and transmit side (merged Replay/Transmit buffer) enables lower memory footprint

- Advanced Reliability, Availability, and Serviceability (RAS) features, including LTSSM timer overrides, ACK/NAK/Replay/UpdateFC timer overrides, unscrambled PIPE interface access, error injection on the Rx and Tx paths, detailed recovery status, and more, support safe and reliable deployment of the IP in mission-critical SoCs

- Optional Transaction Layer bypass allows for customer-specific transaction and application layers

- Optional QuickBoot mode allows for up to 4x faster link training, cutting system-level simulation time by 20%
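The MSI/MSI-X remapping option matters most with SR-IOV because every function, physical or virtual, can carry its own MSI-X table. The sketch below gives an upper bound on that state using sizes fixed by the PCIe specification: 16 bytes per table entry, at most 2048 vectors per function, and a Pending Bit Array (PBA) of one bit per vector rounded up to whole QWORDs. The 1 PF + 64 VF, 32-vector configuration is hypothetical, and the sketch says nothing about how the controller itself stores remapped tables.

```python
MSIX_ENTRY_BITS = 128    # 4 DWORDs: Message Address, Message Upper Address, Message Data, Vector Control
MSIX_MAX_VECTORS = 2048  # the Table Size field allows up to 2048 vectors per function
QWORD_BITS = 64


def msix_storage_bits(vectors_per_function: int, functions: int) -> int:
    """Upper bound on MSI-X table + PBA state summed over all functions."""
    if not 1 <= vectors_per_function <= MSIX_MAX_VECTORS:
        raise ValueError("MSI-X allows 1 to 2048 vectors per function")
    if functions < 1:
        raise ValueError("need at least one function")
    table_bits = vectors_per_function * MSIX_ENTRY_BITS
    # The PBA holds one pending bit per vector, allocated in whole QWORDs.
    pba_qwords = (vectors_per_function + QWORD_BITS - 1) // QWORD_BITS
    return functions * (table_bits + pba_qwords * QWORD_BITS)


if __name__ == "__main__":
    # Hypothetical SR-IOV configuration: 1 PF + 64 VFs, 32 vectors each.
    bits = msix_storage_bits(vectors_per_function=32, functions=1 + 64)
    print(f"{bits} bits (~{bits / 8 / 1024:.1f} KiB) of MSI-X state")
```

Held in flip-flops, roughly 270,000 bits of state is expensive; held in a memory it becomes a modest RAM, which is consistent with the reduced gate count claimed for this option.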

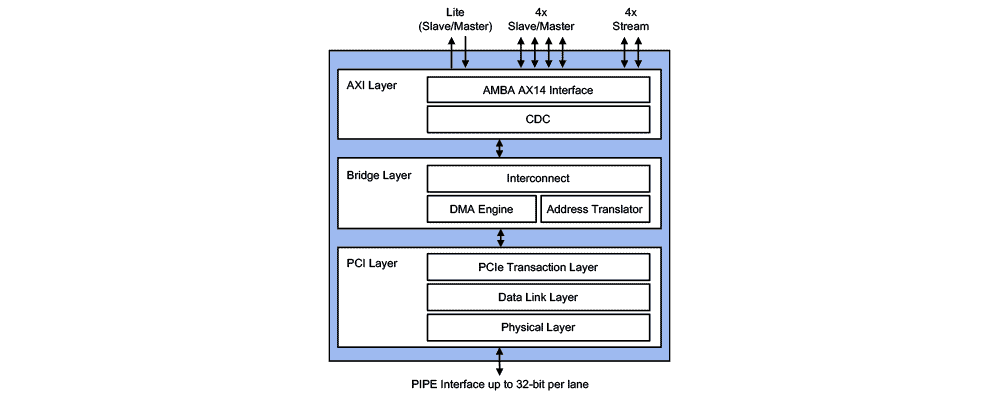

PCIe 3.1 Controller with AXI

PCI Express layer

- Compliant with the PCI Express 3.1/3.0 and PIPE (16- and 32-bit) specifications

- Compliant with the PCI-SIG Single-Root I/O Virtualization (SR-IOV) specification

- Supports Endpoint, Root Port, and Dual-mode configurations

- Supports x16, x8, x4, x2, and x1 link widths at 8 GT/s, 5 GT/s, and 2.5 GT/s

- Supports AER, ECRC, ECC, MSI, MSI-X, Multi-function, P2P, crosslink, and other optional features

- Supports many ECNs, including LTR, L1 PM substates, etc.

AMBA AXI layer

- Compliant with the AMBA® AXI™ Protocol Specification (AXI3, AXI4 and AXI4-Lite) and AMBA® 4 AXI4-Stream Protocol Specification

- Supports multiple, user-selectable AXI interfaces, including AXI Master, AXI Slave, and AXI Stream

- Data width of each AXI interface independently configurable as 256, 128, or 64 bits

- Each AXI interface can operate in a separate clock domain
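Moving PCIe payloads onto an AXI interface means cutting them into AXI bursts whose beat size follows the configured data width. The sketch below applies two rules from the AMBA AXI specification: a burst must not cross a 4 KB address boundary, and an AXI4 INCR burst is limited to 256 beats (16 for AXI3). It assumes addresses and lengths aligned to the bus width; the controller's actual burst-splitting policy is not described here, and `split_into_bursts` is a hypothetical helper.

```python
AXI_BOUNDARY = 4096  # AXI bursts must not cross a 4 KB address boundary


def split_into_bursts(addr: int, length: int, data_width_bits: int, max_beats: int = 256):
    """Split [addr, addr + length) into (start_address, beats) INCR bursts.

    Assumes addr and length are multiples of the bus width in bytes.
    max_beats is 256 for AXI4 INCR bursts and 16 for AXI3.
    """
    if data_width_bits not in (64, 128, 256):
        raise ValueError("data width must be 64, 128, or 256 bits")
    bytes_per_beat = data_width_bits // 8
    if addr % bytes_per_beat or length % bytes_per_beat:
        raise ValueError("address and length must be aligned to the bus width")
    bursts = []
    end = addr + length
    while addr < end:
        to_boundary = AXI_BOUNDARY - (addr % AXI_BOUNDARY)
        chunk = min(end - addr, to_boundary, max_beats * bytes_per_beat)
        bursts.append((addr, chunk // bytes_per_beat))
        addr += chunk
    return bursts


if __name__ == "__main__":
    # A 256-byte payload that straddles a 4 KB boundary on a 256-bit interface.
    print(split_into_bursts(0x0FC0, 256, 256))
```

On a 256-bit interface the 4 KB rule is always the binding limit (256 beats would be 8 KB), whereas on a 64-bit interface the 256-beat cap splits a 4 KB page into two bursts.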

Data engines

- Built-in Legacy DMA engine

- Up to 8 DMA channels, Scatter-Gather, descriptor prefetch

- Completion reordering, interrupt and descriptor reporting

- Optional Address Translation tables for direct PCIe to AXI and AXI to PCIe communication
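The scatter-gather and descriptor-prefetch features can be pictured with a simple model: a channel walks a contiguous table of descriptors, each naming a source, destination, and length, and reads several descriptors per fetch so the next one is ready before the current transfer ends. The descriptor fields and the `run_channel` function below are hypothetical and do not reflect the controller's real descriptor format; completion reordering and arbitration among the up to 8 channels are not modeled.

```python
from dataclasses import dataclass


@dataclass
class Descriptor:
    # Hypothetical descriptor layout, for illustration only.
    src: int            # source address (for example, a PCIe bus address)
    dst: int            # destination address (for example, an AXI address)
    length: int         # bytes to copy
    irq: bool = False   # request an interrupt when this descriptor completes
    last: bool = False  # marks the end of the chain


def run_channel(table, start=0, prefetch=4):
    """Walk a contiguous descriptor table from `start` until a `last` descriptor.

    Descriptors are fetched `prefetch` at a time to model descriptor prefetch,
    so a fetch may read entries past the end of the chain that are never executed.
    Returns (transfers, interrupt_indices, fetch_count).
    """
    if prefetch < 1:
        raise ValueError("prefetch must be at least 1")
    transfers, interrupts, fetches = [], [], 0
    idx = start
    while True:
        batch = table[idx: idx + prefetch]  # one descriptor-fetch burst
        if not batch:
            raise ValueError("descriptor table ended without a 'last' descriptor")
        fetches += 1
        for offset, desc in enumerate(batch):
            transfers.append((desc.src, desc.dst, desc.length))
            if desc.irq:
                interrupts.append(idx + offset)
            if desc.last:
                return transfers, interrupts, fetches
        idx += len(batch)


if __name__ == "__main__":
    # Three scattered source buffers gathered into one contiguous destination.
    chain = [
        Descriptor(src=0x1000, dst=0x8000, length=512),
        Descriptor(src=0x5000, dst=0x8200, length=1024),
        Descriptor(src=0x9000, dst=0x8600, length=256, irq=True, last=True),
    ]
    print(run_channel(chain, prefetch=2))
```

A larger prefetch depth means fewer descriptor reads per chain at the cost of occasionally fetching entries beyond the last descriptor.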

IP files

- Verilog RTL source code

- Libraries for functional simulation

- Configuration assistant GUI

Documentation

PCI Express Bus Functional Model

- Encrypted simulation libraries

Software

- PCI Express Windows x64 and Linux x64 device drivers

- PCIe C API

Reference Designs

- Synthesizable Verilog RTL source code

- Simulation environment and test scripts

- Synthesis project & DC constraint files (ASIC)

- Synthesis project & constraint files for supported FPGA hardware platforms (FPGA)

Advanced Design Integration Services

- Integration of commercial and proprietary PCIe PHY IP

- Development and validation of custom PCIe PCS layer

- Customization of the Controller IP to add customer-specific features

- Generation of custom reference designs

- Generation of custom verification environments

- Design/architecture review and consulting
