Electroiq recently posted an article about Linley’s Cloud Hardware Conference. As the publication explains, the explosive growth in demand for bandwidth and cloud computing capacity poses a new set of challenges and opportunities for the semiconductor supply chain.

For example, says Kushagra Vaid, Microsoft’s GM Hardware Engineering, Cloud & Enterprise, Azure grew by 2X last year, although the company was unable to “pull more performance” out of the existing architecture.

“We are at a junction point where we have to evolve the architecture of the last 20-30 years,” he stated. “We can’t design for a workload so huge and diverse. It’s not clear what part of it runs on any one machine. How do you know what to optimize? Past benchmarks are completely irrelevant.”

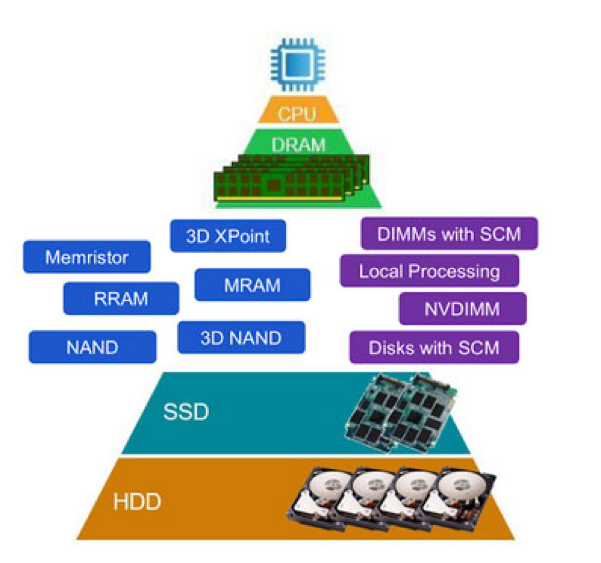

According to Steve Woo, Rambus VP, Systems and Solutions, system architectures are likely to see new types and deployments of memory for higher speeds, along with different levels of non-volatile cache. Indeed, says Woo, the industry has begun implementing new designs and accelerator subsystems that curtail the need to move large amounts of data back and forth over limited pipelines.

“Data is doubling every 2-2.5 years, but DRAM bandwidth is only doubling every 5 years. It’s not keeping up,” he told conference attendees. “We’ll see the addition of more tiers of memory over the next few years.”
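To put Woo's figures in perspective, the short sketch below compounds the two doubling rates and reports how far data volume outpaces DRAM bandwidth over a given horizon. It is only a back-of-the-envelope illustration: the function names and the ten-year horizon are assumptions made here, and it treats both trends as smooth exponentials, which real growth is not.

```python
def growth_factor(years: float, doubling_period: float) -> float:
    """Growth multiple after `years` for a quantity that doubles every `doubling_period` years."""
    return 2.0 ** (years / doubling_period)


def data_to_bandwidth_gap(years: float, data_doubling: float,
                          bandwidth_doubling: float = 5.0) -> float:
    """Ratio of data growth to DRAM bandwidth growth over `years`.

    The default 5-year bandwidth doubling period comes from the quote above;
    the smooth exponential model is an assumption of this sketch.
    """
    return growth_factor(years, data_doubling) / growth_factor(years, bandwidth_doubling)


if __name__ == "__main__":
    horizon = 10  # years; an arbitrary horizon chosen for illustration
    for data_doubling in (2.0, 2.5):  # the two ends of the quoted range
        gap = data_to_bandwidth_gap(horizon, data_doubling)
        print(f"Data doubling every {data_doubling} years: "
              f"gap after {horizon} years is {gap:.1f}x")
```

Under these assumptions, data grows 8x faster than DRAM bandwidth over ten years if it doubles every two years, and 4x faster if it doubles every 2.5 years.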

The emerging challenge, says Woo, is determining which data to place in which tier of memory, using the most appropriate technology for each, as well as how to move memory closer to the CPU. Racks may replace servers as the basic unit, so that each rack can be optimized with more memory or additional processors as needed.

To be sure, the continued evolution of high-performance computing (HPC), alongside conventional computing, will require system designers to rethink traditional architectures and software, while considering the use of new devices and materials. For example, says Woo, FPGAs are already helping the semiconductor industry shape the computing platforms of the future.

“As we prepare for the Post-Moore Era, system architectures will need to evolve to move forward,” he explained. “Traditional processors coupled with FPGAs, along with technologies to minimize data movement, offer new approaches to improving performance and power efficiency and offer a glimpse of things to come in next-gen systems.”

As Woo emphasizes, the industry is still in the early days of understanding how to use FPGAs in environments like data centers, so the adoption of FPGAs in this market will depend greatly on how much applications can benefit from them.

“As Microsoft has demonstrated with Catapult, there are already plenty of compelling reasons to adopt them for modern workloads,” he added.