We had such a great response to last week’s More CXL Webinar Q&A blog that we decided to reprise the questions answered live in our webinar How CXL Technology will Revolutionize the Data Center (available on-demand). We hope this provides more insight into the capabilities of Compute Express Link™ (CXL™) technology.

This post covers the following questions:

- There have been multiple interconnect standards proposed in the past: CXL, Gen-Z, OpenCAPI and CCIX. Is CXL going to be the one that finally gets adopted?

- What is memory coherence and why is it important?

- Will CXL be a positive or negative driver of overall memory capacity per server going forward given it provides a more efficient means of memory use?

- What are the long-term implications for the RDIMM market given CXL can now be used to attach DRAM to the CPU?

- How is compliance handled in CXL? How do you know if a device is “CXL-compliant”?

- For the first wave of CXL products that introduce memory expansion, what’s the biggest demand from customers…more bandwidth or more capacity?

1. There have been multiple interconnect standards proposed in the past: CXL, Gen-Z, OpenCAPI and CCIX. Is CXL going to be the one that finally gets adopted?

CXL has wide industry support: the market-leading platform makers, Intel® and AMD®, and all the leading cloud service providers (CSPs) are backing it. That is the level of support a new technology with as broad a reach as CXL needs in order to succeed. Gen-Z and OpenCAPI assets have now been combined with CXL, which further strengthens CXL by allowing the best parts of all these standards to be brought together over time under the CXL umbrella. In addition, CXL adopts the PCI Express® (PCIe®) physical layer, so it benefits from the ubiquitous deployment of PCIe in the data center.

2. What is memory coherence and why is it important?

Coherence means that when two processors share a piece of data and one processor updates it, the other processor is guaranteed to see that update. Hardware provides this guarantee, which makes programming much, much easier and helps programmers develop more complex applications in which cores need to share data.

In the context of CXL, the standard provides the same memory coherence mechanisms that are used today with native DRAM. Later versions of the specification add further hardware coherency support capabilities.
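To make the coherence guarantee concrete, here is a minimal Python sketch of a toy write-invalidate scheme with two private caches. It is an illustration only: the `SharedMemory` and `Cache` classes, the `demo` function and the address `0x10` are hypothetical names chosen for this example, and the model is far simpler than anything in real hardware or in the CXL specification.

```python
# Toy write-invalidate coherence model.
# Assumption: illustrative only; this is NOT the CXL protocol or any real CPU's.


class SharedMemory:
    """Backing store plus a list of the caches attached to it."""

    def __init__(self):
        self.data = {}
        self.caches = []

    def attach(self, cache):
        self.caches.append(cache)

    def invalidate_others(self, writer, addr):
        # Drop stale copies of addr from every cache except the writer's.
        for cache in self.caches:
            if cache is not writer:
                cache.lines.pop(addr, None)


class Cache:
    """A private cache belonging to one (hypothetical) processor."""

    def __init__(self, name, memory):
        self.name = name
        self.memory = memory
        self.lines = {}
        memory.attach(self)

    def read(self, addr):
        # On a miss, fetch the current value from shared memory.
        if addr not in self.lines:
            self.lines[addr] = self.memory.data.get(addr, 0)
        return self.lines[addr]

    def write(self, addr, value):
        # Invalidate other copies first, then write through to memory.
        self.memory.invalidate_others(self, addr)
        self.lines[addr] = value
        self.memory.data[addr] = value


def demo():
    mem = SharedMemory()
    cpu0 = Cache("cpu0", mem)
    cpu1 = Cache("cpu1", mem)
    first = cpu1.read(0x10)    # cpu1 caches the initial value
    cpu0.write(0x10, 42)       # cpu0 updates; cpu1's copy is invalidated
    second = cpu1.read(0x10)   # cpu1 misses and refetches the new value
    return first, second


if __name__ == "__main__":
    print(demo())
```

Without the invalidation step, `cpu1` would keep returning its stale cached value after `cpu0` writes. Hardware coherence does this bookkeeping for the programmer.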

3. Will CXL be a positive or negative driver of overall memory capacity per server going forward given it provides a more efficient means of memory use?

In fact, we think CXL will end up increasing memory demand. Fundamentally, CXL came into existence because of the need for more memory. But, as you point out, it also allows memory to be used more efficiently.

New technology to improve efficiency is a natural evolution in the data center. We can look back at a couple of similar situations over the past 20 years and see how they played out.

Back when multi-core CPUs were introduced, there was a concern that fewer CPUs would be sold. But in reality, multi-core CPUs opened up new capabilities that software developers took advantage of to build new applications. In the end, there was more demand for multi-core CPUs, and the market grew.

Server virtualization was expected to reduce capex on server equipment, because servers were becoming more efficient. Instead, the market grew as business models like Infrastructure as a Service and Platform as a Service thrived.

What we’ve seen over the years is that if you give software developers access to more tools, they create new workloads. Business leaders develop new product offerings. All of this drives a virtuous cycle of greater hardware market growth.

The industry as a whole is just scratching the surface for AI/ML use cases. CXL will enable new memory capacity, bandwidth, and tiering which will drive continued significant investment in memory infrastructure to enable these AI/ML workloads.

So back to efficiency – making memory more efficient is incredibly important because companies and cloud service providers are investing so much in memory to satisfy their insatiable need for more bandwidth and capacity. Capex dollars will continue to be spent on new memory, and CXL will help companies get much greater utility out of that spend.

4. What are the long-term implications for the RDIMM market given CXL can now be used to attach DRAM to the CPU?

Well, the RDIMM market is here to stay. Although CXL provides new options for memory tiering, data center operators will continue to want to have a significant amount of memory as close to the processing cores as possible with the lowest possible latency. RDIMMs address this need very well and will continue to address this tier of the memory hierarchy.

In addition, as we showed during the presentation, there are many use cases for CXL-attached memory modules which use RDIMMs. Cloud Service Providers have long relationships buying RDIMMs, they know how to use them, and they get an easily serviceable module in the data center. Therefore, we believe the market for RDIMMs will increase as a result of CXL.

5. How is compliance handled in CXL? How do you know if a device is “CXL-compliant”?

The CXL Consortium is developing a compliance program very similar to that of the PCI-SIG. We should expect the first CXL 1.1-compliant devices to appear on an integrators list next year (2023).

6. For the first wave of CXL products that introduce memory expansion, what’s the biggest demand from customers…more bandwidth or more capacity?

Great question and one that I get a lot. The simple answer is that it is really workload dependent. But the good news is that CXL can deliver both bandwidth and capacity.

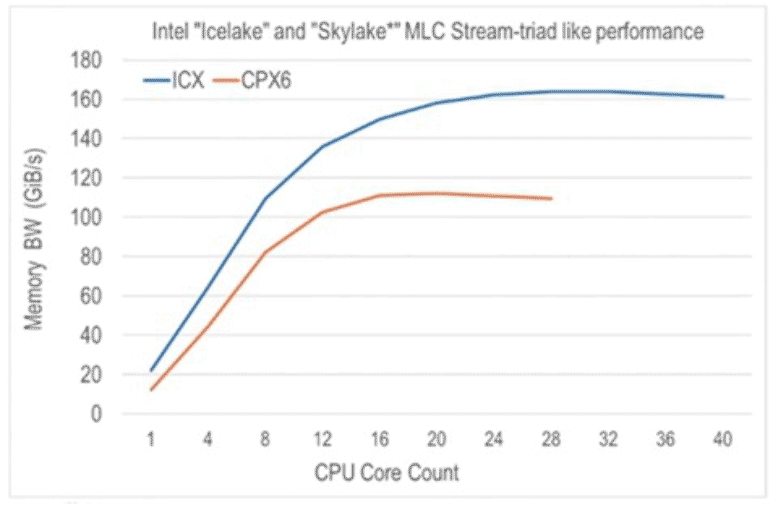

If we go back to the graph (see above) I showed earlier in the webinar, where we hit a wall with the number of cores that we can serve with direct memory channels, that is, to first order, a bandwidth problem. But as soon as you unlock cores to do more work, generally speaking, those cores are going to require access to more memory capacity, whether it be more hot memory, warm memory, or cold memory. This new capacity and these new memory tiers are also enabled by the same CXL devices that can deliver the additional bandwidth.

There are workloads that are biased either to more bandwidth or to more capacity. For example, web search and content provider use cases would trade additional bandwidth for additional capacity. In many AI/ML inference models and in-memory databases, on the other hand, capacity is extremely important. So, it really is a mix of needs dependent on the workload.

The beauty of CXL is that it provides for a wide variety of memory tiers that can be adopted in the right combination to best serve the needs of a given workload while delivering the lowest overall total cost of ownership. When overlaid with software, like that which will come out of initiatives like the recently announced OCP Composable Memory System workgroup, the possibilities are very exciting.

If you have any questions regarding CXL technology or Rambus products, feel free to ask them here.
