The origins of GDDR

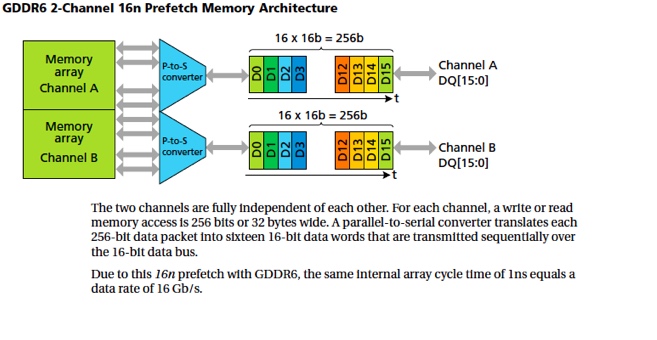

The origins of modern graphics double data rate (GDDR) memory can be traced back to GDDR3 SDRAM. Designed by ATI Technologies, GDDR3 made its first appearance in Nvidia’s GeForce FX 5700 Ultra card, which debuted in 2004. Offering reduced latency and high bandwidth for GPUs, GDDR3 was followed by GDDR4, GDDR5, GDDR5X and GDDR6, the last of which is slated to power a wide range of products in 2018. Backed by Micron Technology, SK Hynix and Samsung, GDDR6 SGRAM will feature a maximum data transfer rate of 16 Gbps per pin and an operating voltage of 1.35V.

Image credit: Micron

Although initially targeted at game consoles and PC graphics, the latest iteration of GDDR is expected to be deployed across multiple verticals, with Micron specifically highlighting the data center and automotive sectors. Similarly, a recent SK Hynix press release describes GDDR6 as “one of [the] necessary memory solutions” for a diverse set of use cases, including artificial intelligence, virtual reality, self-driving cars and 4K displays. Meanwhile, Samsung notes that GDDR6 can process images and video at 16 Gbps per pin, for 64GB/s of data I/O bandwidth per chip. That is equivalent to transferring approximately 12 full-HD DVDs (about 5GB each) every second.
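Samsung’s 64GB/s figure can be reproduced from the 16 Gbps per-pin rate. The sketch below is a back-of-the-envelope check, not a Samsung formula; it assumes a 32-bit (x32) device interface and decimal units (1GB = 8Gb), neither of which is stated in the source, and the constant and function names are illustrative only.

```python
# Per-device GDDR6 bandwidth from per-pin data rate and interface width.
# Assumptions (not from the source): a 32-bit (x32) device interface and
# decimal units (1 GB = 8 Gb).

PIN_RATE_GBPS = 16      # GDDR6 per-pin data rate, Gb/s
INTERFACE_WIDTH = 32    # data pins per device (assumed x32)
DVD_SIZE_GB = 5         # DVD size used in Samsung's comparison

def device_bandwidth_gbytes(pin_rate_gbps: float, width_bits: int) -> float:
    """Return device bandwidth in GB/s: pins x per-pin rate, bits -> bytes."""
    return pin_rate_gbps * width_bits / 8

bandwidth = device_bandwidth_gbytes(PIN_RATE_GBPS, INTERFACE_WIDTH)
dvds_per_second = bandwidth / DVD_SIZE_GB

print(f"{bandwidth:.0f} GB/s, about {dvds_per_second:.1f} DVDs per second")
```

Running it prints `64 GB/s, about 12.8 DVDs per second`, which Samsung rounds down to “approximately 12” DVDs.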

Use Case: Automotive

Current vehicles typically use DRAM solutions with bandwidths of less than 60GB/s, but self-driving cars will require a new generation of memory with much higher bandwidth. That extra bandwidth will enable autonomous vehicles to rapidly execute massive calculations and make safe, real-time decisions on roads and highways. For comparison, automotive systems in 2020 models are slated to use x32 LPDRAM components at I/O signaling speeds of up to 4266Mb/s, or roughly 17GB/s per component.

However, Micron estimates that advanced driver assistance systems (ADAS) will ultimately demand 512GB/s to 1024GB/s of bandwidth to support Level 3 and Level 4 autonomous driving. As such, the company considers GDDR the “best alternative” for delivering that bandwidth within the automotive industry’s reliability and temperature-range constraints.
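To put Micron’s ADAS estimate in perspective, the sketch below counts how many x32 devices would have to run in parallel to reach 512GB/s and 1024GB/s. It is a simplified model, assuming every device is x32, bandwidth adds up perfectly across devices, and 1GB/s = 8,000Mb/s; real designs lose some of this peak to protocol and refresh overhead. The helper names are hypothetical.

```python
# Rough count of x32 devices needed to reach Micron's ADAS bandwidth targets.
# Assumptions (not from the source): all devices are x32, bandwidth adds
# perfectly across devices, and decimal units (1 GB/s = 8,000 Mb/s).

MBPS_PER_GBYTE = 8_000  # 1 GB/s = 8 Gb/s = 8,000 Mb/s

def device_bandwidth_mbps(pin_rate_mbps: int, width_bits: int = 32) -> int:
    """Peak bandwidth of one device in Mb/s (per-pin rate x data pins)."""
    return pin_rate_mbps * width_bits

def devices_needed(target_gbytes: int, pin_rate_mbps: int) -> int:
    """Smallest whole number of devices whose combined peak meets the target."""
    target_mbps = target_gbytes * MBPS_PER_GBYTE
    per_device = device_bandwidth_mbps(pin_rate_mbps)
    # Integer ceiling division: round any fractional device up.
    return -(-target_mbps // per_device)

PIN_RATES_MBPS = {
    "x32 LPDRAM @ 4266 Mb/s": 4266,
    "x32 GDDR6 @ 16 Gb/s": 16_000,
}

for name, rate in PIN_RATES_MBPS.items():
    per_device_gbytes = device_bandwidth_mbps(rate) / MBPS_PER_GBYTE
    low, high = (devices_needed(t, rate) for t in (512, 1024))
    print(f"{name}: {per_device_gbytes:.1f} GB/s per device, "
          f"{low}-{high} devices for 512-1024 GB/s")
```

Under these assumptions, each LPDRAM device peaks at about 17.1GB/s, so 31 to 61 of them would be needed, versus 8 to 16 GDDR6 devices at 64GB/s each (a 256-bit to 512-bit aggregate bus). Integer ceiling division is used so that a fractional device always rounds up.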

Use Case: Data Center

Growth in several major areas is driving unprecedented demand for bandwidth and capacity in the data center. These include Internet of Things (IoT) devices such as wearables and smart home appliances, connected vehicles, mobile data and video, as well as cloud services and intelligent analytics. According to Micron, GDDR offers a “huge step” in DRAM performance, making it well suited to the demands of data centers and modern networks. More specifically, DDR4 currently tops out at 3.2Gb/s per pin and GDDR5 at 8Gb/s. GDDR6, in contrast, will reach a maximum of 16Gb/s per pin, five times the speed of DDR4 and twice that of GDDR5.
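The speed comparison is a ratio of peak per-pin data rates, which the sketch below makes explicit using the figures quoted above. It deliberately ignores bus width: a DDR4 DIMM and a GDDR6 device have different numbers of data pins, so system-level bandwidth ratios will differ from these per-pin ratios. The dictionary and function names are illustrative.

```python
# Per-pin data rate comparison using the figures quoted above (Gb/s per pin).
PEAK_PIN_RATE_GBPS = {"DDR4": 3.2, "GDDR5": 8.0, "GDDR6": 16.0}

def speedup(new: str, old: str) -> float:
    """Ratio of peak per-pin data rates (new / old); ignores bus width."""
    return PEAK_PIN_RATE_GBPS[new] / PEAK_PIN_RATE_GBPS[old]

for baseline in ("DDR4", "GDDR5"):
    print(f"GDDR6 vs {baseline}: {speedup('GDDR6', baseline):.1f}x per pin")
```

It prints a 5.0x ratio against DDR4 and 2.0x against GDDR5.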

As IDG’s Agam Shah notes, GDDR6 could be used in network switches and routers, some of which already ship with GDDR5 memory. In addition, says Shah, GDDR6 will find a place in high-performance computing (HPC) systems for machine learning. One example of a deep-buffer switch that uses GDDR memory is the Interface Masters® Edge Aggregation Switch, a system designed for the optical transport, carrier Ethernet, edge switching, data center cloud and enterprise campus market segments.
