[Updated on June 10, 2024] AI training data sets continue to grow and require accelerators that support terabyte-scale bandwidth. Combining high memory bandwidth with power efficiency, HBM3E has become a leading choice for AI training hardware.

Find out why in our blog below.

What is HBM3E Memory?

Introduced in January 2022, HBM3 is the latest generation of High Bandwidth Memory (HBM), a high-performance 2.5D/3D memory architecture. Like all previous versions, HBM3 uses a wide, 1024-bit data path. Operating at 6.4 gigabits per second (Gb/s) per pin, HBM3 delivers a bandwidth of 819 gigabytes per second (GB/s). HBM3E extends the data rate to 9.6 Gb/s with the same feature set. Given its outstanding bandwidth, high capacity and compact footprint, HBM3E has become the memory solution of choice for advanced AI workloads.
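The bandwidth figures above follow directly from the per-pin data rate and the width of the data path. As a minimal sketch (the function name `hbm_bandwidth_gbytes` is ours, purely for illustration), the arithmetic looks like this:

```python
def hbm_bandwidth_gbytes(data_rate_gbps: float, bus_width_bits: int = 1024) -> float:
    """Peak bandwidth per device in GB/s.

    Per-pin data rate (Gb/s) times the number of data pins,
    divided by 8 bits per byte.
    """
    return data_rate_gbps * bus_width_bits / 8


# Data rates quoted in the text: 6.4 Gb/s for HBM3, 9.6 Gb/s for HBM3E
print(f"HBM3:  {hbm_bandwidth_gbytes(6.4):.1f} GB/s")
print(f"HBM3E: {hbm_bandwidth_gbytes(9.6):.1f} GB/s")
```

These are peak interface numbers; sustained bandwidth in a real system depends on the access pattern and the memory controller.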

What is a 2.5D/3D Architecture?

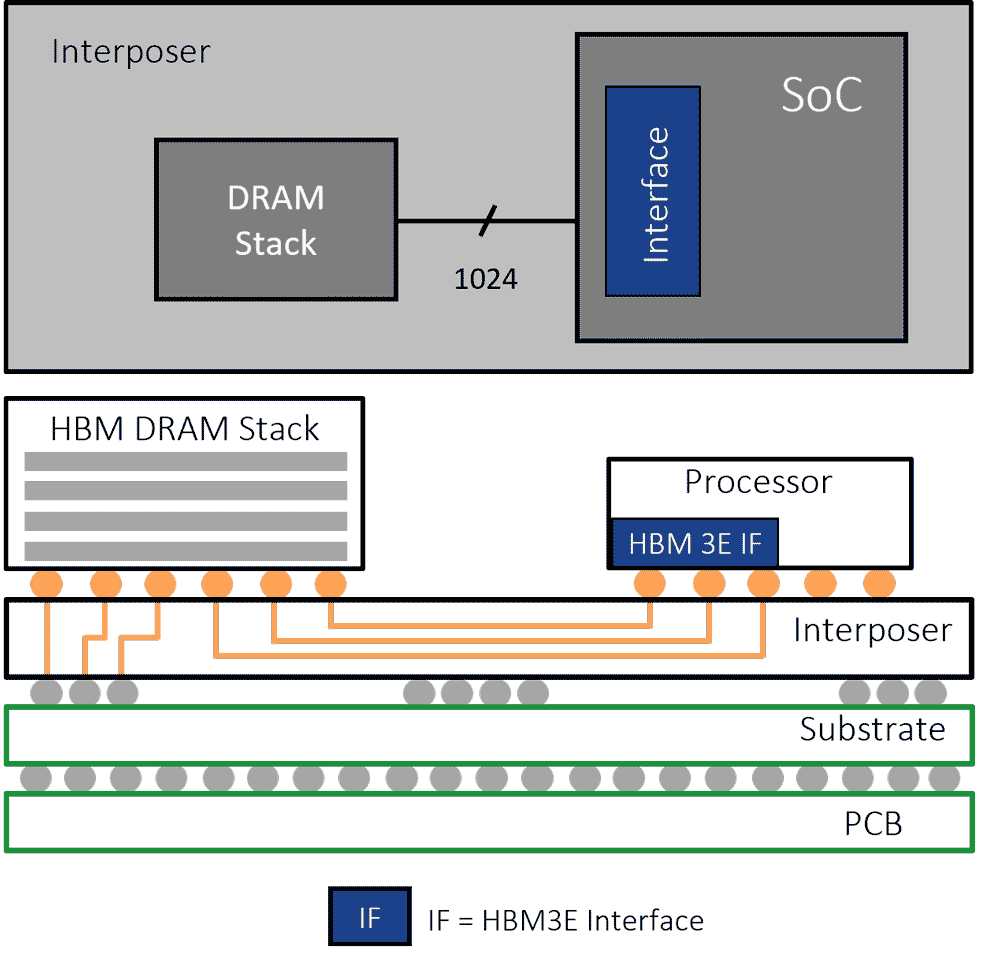

The “3D” part is easy to see: an HBM device is a 3D stack of DRAM dies in a single package. The “2.5D” refers to the way the HBM devices connect to the processing chip, be it a GPU or AI accelerator. The data path between each HBM device and the processor requires 1024 “wires” or traces. Adding command and address, clocks and other signals brings the number of traces to about 1,700.

More than a thousand traces is far more than a standard PCB can support. Therefore, a silicon interposer is used as an intermediary to connect the memory device(s) and the processor. As with an integrated circuit, finely spaced traces can be etched in the silicon interposer, providing the number of wires needed for the HBM interface. The HBM device(s) and the processor are mounted atop the interposer in what is referred to as a 2.5D architecture.

How is HBM3E Different from HBM2E, HBM2 or HBM (Gen 1)?

HBM3 represents the third major generation of the HBM standard. With each generation, we’ve seen an upward trend in data rate, 3D-stack height, and DRAM chip density. That translates to higher bandwidth and greater device capacity with each upgrade of the specification.

HBM launched with a 1 Gb/s data rate, and a maximum of 8-high 3D stacks of 16 Gb devices. With HBM3, the data rate scales up to 6.4 Gb/s, and the devices can support 16-high stacks of 32 Gb capacity DRAM. The major DRAM manufacturers have introduced HBM3E devices which push data rates to 9.6 Gb/s.

In parallel with higher density HBM devices, chip architects are driving to higher attach rates in their AI accelerator and GPU designs. A design with six HBM3 devices operating at 6.4 Gb/s delivers 4.9 TB/s of memory bandwidth.
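To make the attach-rate arithmetic concrete, here is a small sketch that scales per-device bandwidth by the number of attached devices. Both helper names are hypothetical, and the sketch assumes decimal units (1 TB/s = 1000 GB/s) and peak rates only:

```python
import math

BUS_WIDTH_BITS = 1024  # data path width per HBM device, from the text


def system_bandwidth_tbytes(devices: int, data_rate_gbps: float) -> float:
    """Aggregate peak bandwidth in TB/s for a number of attached devices."""
    per_device_gbytes = data_rate_gbps * BUS_WIDTH_BITS / 8
    return devices * per_device_gbytes / 1000


def devices_needed(target_tbytes: float, data_rate_gbps: float) -> int:
    """Smallest device count whose aggregate peak bandwidth meets the target."""
    per_device_tbytes = system_bandwidth_tbytes(1, data_rate_gbps)
    return math.ceil(target_tbytes / per_device_tbytes)


# Six HBM3 devices at 6.4 Gb/s, as in the example above
print(f"{system_bandwidth_tbytes(6, 6.4):.1f} TB/s")

# How many devices reach the same 4.9 TB/s at the HBM3E rate of 9.6 Gb/s?
print(devices_needed(4.9, 9.6))
```

The second call illustrates the trade-off a chip architect faces: a higher data rate can reach the same aggregate bandwidth with fewer devices, at the cost of less total capacity.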

| Generation | Data Rate (Gb/s) | Bandwidth per Device (GB/s) | Stack Height | Max. DRAM Capacity (Gb) | Max. Device Capacity (GB) |
|---|---|---|---|---|---|
| HBM | 1.0 | 128 | 8 | 16 | 16 |
| HBM2 | 2.0 | 256 | 8 | 16 | 16 |
| HBM2E | 3.6 | 461 | 12 | 24 | 36 |
| HBM3 | 6.4 | 819 | 16 | 32 | 64 |
| HBM3E | 9.6 | 1229 | 16 | 32 | 64 |
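The last column of the table can be derived from the two before it: maximum device capacity is the stack height multiplied by the per-die DRAM density, converted from gigabits to gigabytes. A short sketch, using the table's values and an illustrative helper name of our own:

```python
# (stack height, max DRAM die density in Gb), copied from the table
GENERATIONS = {
    "HBM": (8, 16),
    "HBM2": (8, 16),
    "HBM2E": (12, 24),
    "HBM3": (16, 32),
    "HBM3E": (16, 32),
}


def device_capacity_gbytes(stack_height: int, die_density_gbits: int) -> int:
    """Max device capacity in GB: dies per stack times Gb per die, over 8 bits per byte."""
    return stack_height * die_density_gbits // 8


for name, (height, density) in GENERATIONS.items():
    capacity = device_capacity_gbytes(height, density)
    print(f"{name:6s} {height:2d}-high x {density} Gb = {capacity} GB")
```

Running it reproduces the 16, 16, 36, 64 and 64 GB figures in the table.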

More Changes with HBM3E/3

But that’s not all. HBM3 also introduces enhancements in power, memory access and RAS over HBM2E.

- Power: HBM3E/3 decreases core voltage to 1.1 V from HBM2E’s 1.2 V. Also, HBM3 reduces IO signaling to 400 mV from the 1.2 V used in HBM2E. Lower voltages translate to lower power. These changes help offset the higher power consumption inherent in moving to higher data rates.

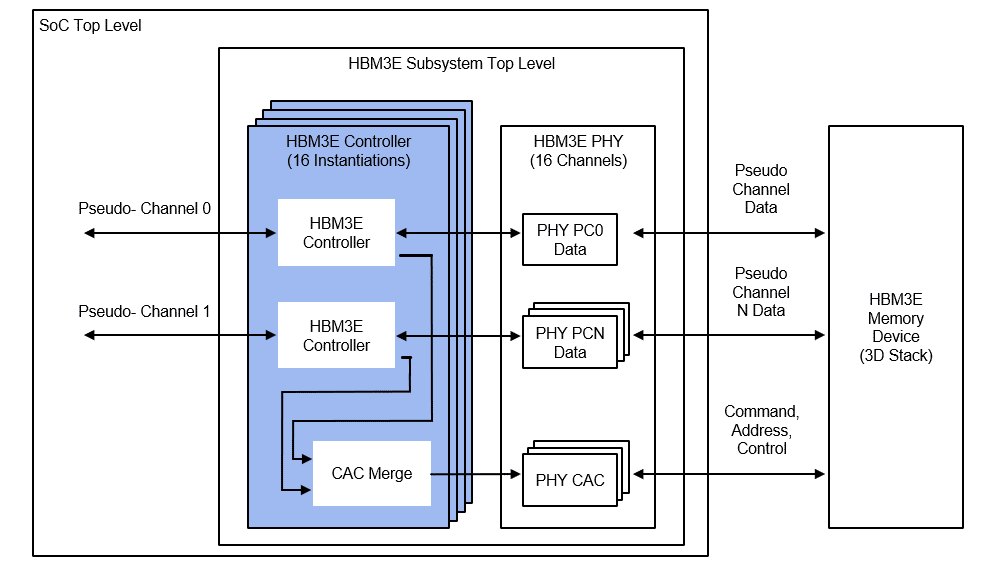

- Channel Architecture: HBM3E/3 divides the 1024-bit wide data path into 16 64-bit channels or 32 32-bit pseudo-channels. Compared with HBM2E’s eight 128-bit channels and 16 64-bit pseudo-channels, this doubles the number of memory channels and increases performance.

- Reliability, Availability, Serviceability (RAS): HBM3E/3 introduces additional host-side and device-side ECC as well as support for Refresh Management (RFM) and Adaptive Refresh Management (ARFM).
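The channel split described in the list above can be checked with a few lines of arithmetic. The sketch below uses a hypothetical `ChannelLayout` class of our own to show that both generations cover the same 1024-bit data path, while HBM3E/3 doubles the number of independently addressable channels:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelLayout:
    """Illustrative description of how the data path is divided."""
    channels: int
    channel_bits: int
    pseudo_channels: int
    pseudo_channel_bits: int

    def total_bits(self) -> int:
        # Width covered when the path is viewed as full channels
        return self.channels * self.channel_bits

    def pseudo_total_bits(self) -> int:
        # Width covered when the path is viewed as pseudo-channels
        return self.pseudo_channels * self.pseudo_channel_bits


# Figures taken from the channel architecture bullet
HBM2E = ChannelLayout(channels=8, channel_bits=128, pseudo_channels=16, pseudo_channel_bits=64)
HBM3 = ChannelLayout(channels=16, channel_bits=64, pseudo_channels=32, pseudo_channel_bits=32)

for name, layout in (("HBM2E", HBM2E), ("HBM3E/3", HBM3)):
    print(name, layout.total_bits(), layout.pseudo_total_bits())

print("Channel count ratio:", HBM3.channels // HBM2E.channels)
```

Narrower, more numerous channels give the memory controller more independent streams to schedule, which is where the performance gain mentioned above comes from.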

Rambus HBM3E/3 Memory Controller Cores

Optimized for high bandwidth and low latency, the Rambus HBM3E/3 Memory Controller cores deliver maximum performance and flexibility for AI training in a compact form factor and power-efficient envelope.

The Rambus HBM3E/3 Memory Controller cores more than double the maximum HBM2E signaling speed, raising data rates to a market-leading 9.6 Gb/s per data pin (well above the standard HBM3 speed of 6.4 Gb/s). The interface features 16 independent channels, each 64 bits wide, for a total data width of 1024 bits. At the maximum data rate, this provides a total interface bandwidth of 1,229 GB/s, or 1.23 terabytes per second (TB/s), of throughput for every attached HBM3E/3 memory device.

Summary

Unrivaled memory bandwidth in a compact, high-capacity footprint has made HBM the memory of choice for AI/ML and other high-performance computing workloads. HBM3, as the latest generation of the standard, raises data rates to 6.4 Gb/s and promises to scale even higher. The Rambus HBM3E/3 Controller cores provide industry-leading support for the extended HBM3 roadmap, with performance up to 9.6 Gb/s. With this solution, designers can achieve as much as 1.23 TB/s of throughput for every attached HBM3E/3 memory device.
