Written by Steven Woo

Introduction

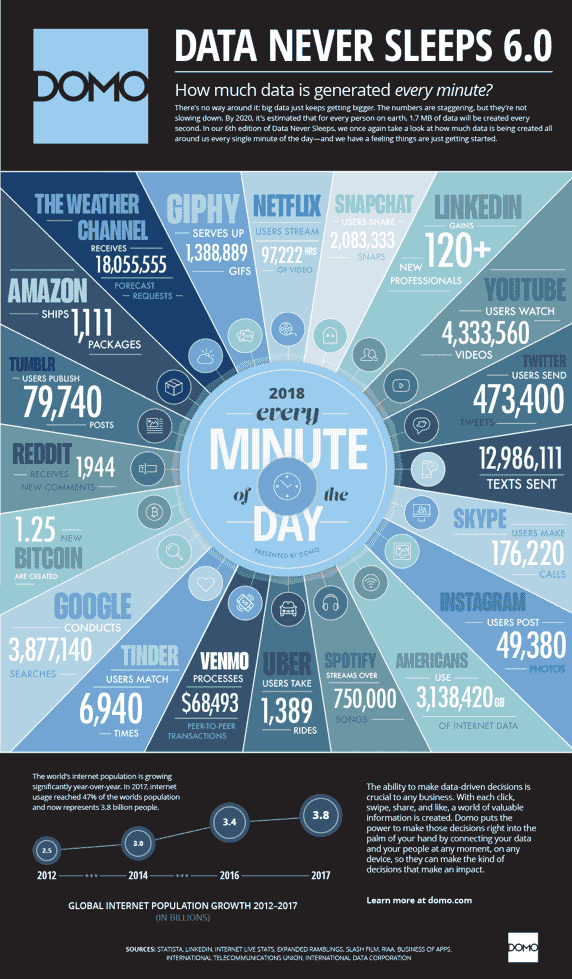

The ongoing transition from 4G to 5G is driving major infrastructure upgrades, including the integration of AI and machine learning capabilities at the edge. Several factors are behind this shift, the most important of which is the relentless growth of the world’s digital data. According to a recent Forbes article, approximately 2.5 quintillion bytes of data are created each day, and DOMO estimates that by 2020, 1.7 MB of data will be created every second for every person on earth.

Beyond the sheer rate of global data growth, carriers see 5G as a lucrative opportunity to generate new revenue streams and raise the average revenue per user (ARPU). Neural networks and machine learning will continue to play prominent roles in supporting a range of low-latency, bandwidth-intensive applications at the edge, including augmented reality, virtual reality, the IoT and Industry 4.0. Accordingly, companies like Nvidia, Google, Intel and ARM are all shipping AI-optimized edge computing platforms.

Offloading Data Processing to Edge Nodes

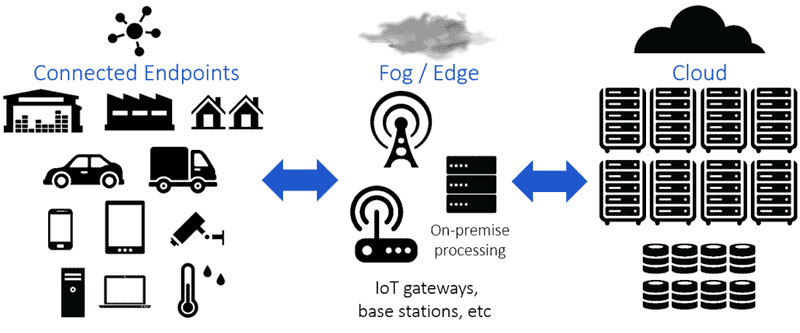

The rapid growth of the world’s digital data, which will accelerate with the transition from 4G to 5G, is adding to the already considerable strain on current network infrastructure. In addition to the services illustrated in the image above, IoT endpoints such as cameras, sensors, meters and connected cars generate significant amounts of data today, much of which is transported to data centers for processing. Offloading certain types of data processing to edge nodes reduces transaction latencies, improves throughput and relieves pressure on the long-haul links to centralized data centers, because the edge nodes forward only a smaller amount of higher-value, already-processed data.
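To make the "smaller amount of higher-value data" idea concrete, here is a minimal sketch of an edge node that reduces a window of raw sensor readings to a compact summary before anything crosses the long-haul link. The sensor, the alert threshold, the JSON encoding and all function names are hypothetical assumptions chosen for illustration, not part of any particular edge platform.

```python
import json
import random
import statistics

# Hypothetical edge-node sketch: raw temperature readings arrive from a sensor,
# and only a compact summary is forwarded over the long-haul link.


def raw_payload(readings):
    """Bytes that would cross the long-haul link if every reading were forwarded."""
    return len(json.dumps(readings).encode("utf-8"))


def edge_summary(readings, threshold):
    """Reduce a window of readings to a small, higher-value summary."""
    return {
        "count": len(readings),
        "mean": round(statistics.mean(readings), 2),
        "max": round(max(readings), 2),
        "alerts": sum(1 for r in readings if r > threshold),
    }


def summary_payload(summary):
    """Bytes forwarded when only the summary leaves the edge node."""
    return len(json.dumps(summary).encode("utf-8"))


if __name__ == "__main__":
    random.seed(0)
    window = [20.0 + random.gauss(0, 1.5) for _ in range(1000)]
    summary = edge_summary(window, threshold=23.0)
    raw = raw_payload(window)
    reduced = summary_payload(summary)
    print(f"raw: {raw} bytes, summary: {reduced} bytes, reduction: {raw / reduced:.0f}x")
```

The same pattern applies to richer workloads: a camera feed might be reduced to detected-object events by a neural network running on the edge node, so that only those events travel to the data center.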

Processing closer to data and endpoints improves latency, bandwidth and energy use

Edge computing also reduces energy consumption by minimizing data movement. Moreover, processing at edge nodes can improve security, because the data resides on infrastructure that is less widely shared than more geographically centralized data centers.

Google’s Edge TPU & Nvidia’s Jetson

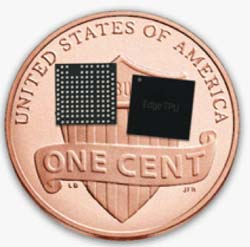

As noted above, a number of companies are designing and shipping specialized silicon for AI at the edge. For example, Google’s Edge TPU builds on the company’s extensive data center TPU work, allowing AI models trained in data centers to run at the edge. The Edge TPU, a purpose-built ASIC designed to deliver high performance at low power and in a small footprint, is programmed through the open-source TensorFlow Lite environment.
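The Edge TPU runs TensorFlow Lite models whose tensors have been quantized to 8-bit integers, so a model trained in floating point in the data center is typically converted before it is deployed at the edge. The sketch below illustrates only the core arithmetic of that step, assuming the affine mapping TensorFlow Lite uses for int8 tensors, real_value = scale * (q - zero_point). The function names are hypothetical, and a real converter also handles calibration data, per-channel scales and operator fusion.

```python
# Minimal sketch of int8 affine quantization, assuming
# real_value = scale * (q - zero_point) with q in [-128, 127].


def quant_params(x_min, x_max, qmin=-128, qmax=127):
    """Choose scale and zero point so that [x_min, x_max] maps onto [qmin, qmax]."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    x_min = min(x_min, 0.0)
    x_max = max(x_max, 0.0)
    scale = (x_max - x_min) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0  # degenerate all-zero range; any positive scale works
    zero_point = int(round(qmin - x_min / scale))
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Map a float to the nearest representable int8 code, clamped to range."""
    q = int(round(x / scale)) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q, scale, zero_point):
    """Recover the approximate float value from its int8 code."""
    return scale * (q - zero_point)


if __name__ == "__main__":
    weights = [-0.9, -0.5, 0.0, 0.25, 0.7]
    scale, zp = quant_params(min(weights), max(weights))
    for w in weights:
        q = quantize(w, scale, zp)
        print(f"{w:+.3f} -> {q:4d} -> {dequantize(q, scale, zp):+.4f}")
```

Each in-range value is recovered to within half a quantization step (scale / 2), which is the accuracy traded for the smaller, integer-only model that low-power edge accelerators are built to execute.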

Deployed in this manner, the Edge TPU is intended to complement the data center TPU and associated services to form a complete, end-to-end AI solution with both hardware and software components.

Current Edge TPU products include the Coral Dev Board and the Coral USB Accelerator. The Coral Dev Board, which runs Mendel Linux, is a single-board computer with a removable system-on-module (SoM) featuring the Edge TPU. Other key specifications include an NXP i.MX 8M SoC (quad Cortex-A53 plus Cortex-M4F), integrated GC7000 Lite graphics, the Google Edge TPU coprocessor as its ML accelerator, 1 GB of LPDDR4 memory and 8 GB of eMMC storage.

Meanwhile, Nvidia targets the edge with the Jetson TX2, a 7.5-watt supercomputer on a module that is built around the company’s Pascal-family GPU. The Jetson TX2 packs 8 GB of memory and 59.7 GB/s of memory bandwidth. The Jetson TX2i variant is aimed at high-performance edge computing devices such as industrial robots, machine vision cameras and portable medical equipment.
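The quoted 59.7 GB/s follows from standard peak-bandwidth arithmetic, assuming the 128-bit LPDDR4 interface at 1866 MHz that NVIDIA lists for the TX2. Peak bandwidth is the bus width in bytes multiplied by the transfer rate, and because LPDDR4 transfers data on both clock edges, 1866 MHz corresponds to 3732 MT/s. A small sketch under those assumptions (the function name is illustrative):

```python
def peak_bandwidth_gb_per_s(bus_width_bits, clock_mhz, transfers_per_clock=2):
    """Theoretical peak DRAM bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    # Double data rate: two transfers per clock cycle by default.
    transfers_per_second = clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


# Jetson TX2, assumed configuration: 128-bit LPDDR4 at 1866 MHz.
print(f"{peak_bandwidth_gb_per_s(128, 1866):.1f} GB/s")  # 59.7 GB/s
```

This is a theoretical ceiling: 16 bytes per transfer times 3.732 billion transfers per second. Sustained bandwidth in real workloads is lower.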

Conclusion

The industry transition from 4G to 5G is generating an explosion of data and serving as a catalyst for the integration of neural networks and machine learning capabilities at the edge. Offloading certain types of data processing to edge nodes minimizes transaction latencies, improves throughput, reduces energy consumption, bolsters security and takes the pressure off long-haul links.
