Written by Steven Woo for Rambus Press

There has been a lot of recent news about domain-specific processors designed for the artificial intelligence (AI) market. Interestingly, many of the techniques used in today's AI chips and applications have been around for several decades. Yet neural networks never really took off during the previous wave of interest in AI, which spanned the 1980s and 1990s. Why not?

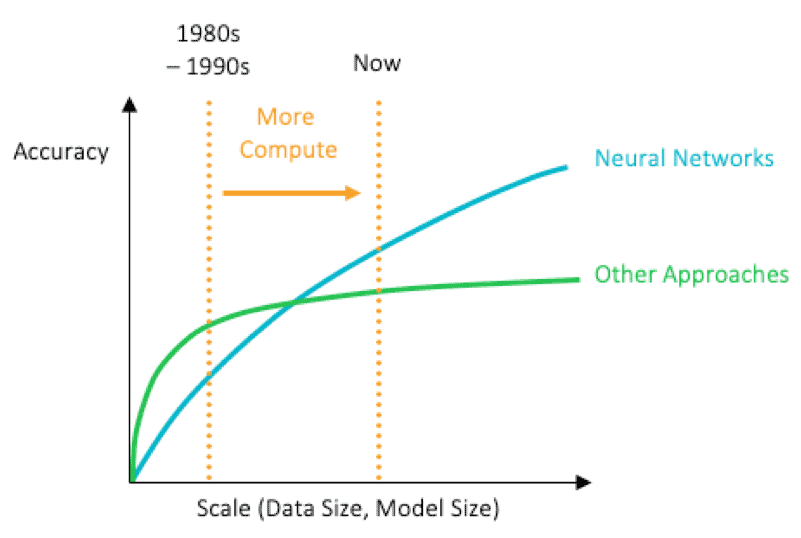

The chart above offers some insight into why AI technology remained relatively static for so many years. In the 1980s and 1990s, processors (CPUs) simply weren't fast enough to handle AI applications adequately. Memory performance also wasn't yet good enough to let neural networks and other modern techniques displace conventional approaches. As a result, conventional approaches remained dominant throughout that period.

Fast forward a few decades to today, and Moore's Law has delivered roughly five orders of magnitude (about 100,000x) more processing power, along with three to four orders of magnitude better memory performance and capacity. These advances have allowed neural networks running on specialized processors to outperform conventional approaches. As a result, neural networks have become the method of choice for a wide range of AI applications.

The timing is fortuitous: even by the most conservative estimates, the world's digital data doubles about every two to three years. There is so much digital data that AI is becoming almost the only way to make sense of it in a timely fashion. A mutual dependence is forming. The abundance of digital data helps us train our neural networks and make them better, and in turn AI is quickly becoming one of the few practical ways to process and interpret that data. This trend will continue well into the future, because the current wave of AI activity is just beginning. There is much more left to do.
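To put that doubling rate in concrete terms, here is a back-of-the-envelope calculation (our own arithmetic, not a figure taken from the chart). If data doubles every $T$ years, the growth factor after $t$ years is:

$$
G(t) = 2^{t/T}, \qquad
G(10)\big|_{T=3} = 2^{10/3} \approx 10.1, \qquad
G(10)\big|_{T=2} = 2^{5} = 32
$$

So even the conservative end of the estimate implies roughly a tenfold increase per decade, which corresponds to about $2^{1/3} \approx 1.26$ to $2^{1/2} \approx 1.41$, or 26% to 41% more data each year.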

AI developers want to create more sophisticated algorithms, use larger datasets, and build new methods and applications. The challenge? Everyone wants more performance. However, the semiconductor industry can no longer rely as fully as it has for the past several decades on two important tools: Moore's Law and Dennard (power) scaling. Moore's Law is slowing, and Dennard scaling broke down around 2007. Meanwhile, the explosion of data and the advent of new AI applications are pushing the semiconductor industry to deliver both better performance and better power efficiency.

In this "Memory Systems for AI" blog series, we'll describe how AI is driving a fascinating renaissance in computer architecture by serving as a catalyst for the design of new domain-specific silicon. The resurgence of interest in new AI architectures makes this an extremely exciting time to be in the semiconductor industry. And because memory bandwidth is a critical resource for AI applications, memory systems have once again become a hot topic within the semiconductor industry and beyond.
