The AI boom is giving rise to profound changes in the data center: compute-intensive workloads are driving unprecedented demand for low-latency, high-bandwidth connectivity between CPUs, accelerators and storage. The Compute Express Link® (CXL®) interconnect offers new ways for data centers to enhance performance and efficiency.

As data centers grapple with increasingly complex AI workloads, efficient communication between components becomes paramount. CXL addresses this need by providing low-latency, high-bandwidth connections that can improve overall memory and system performance.

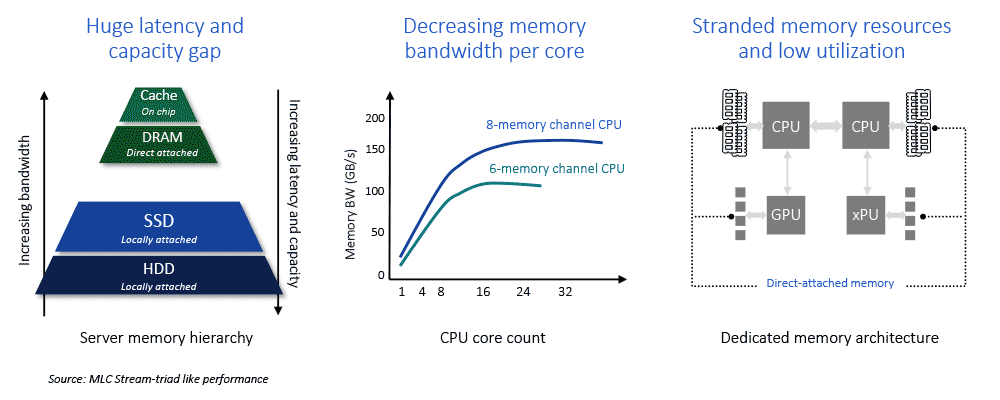

CXL 3.1 takes data rates up to 64 GT/s and offers multi-tiered (fabric-attached) switching to allow for highly scalable memory pooling and sharing. These features will be key in the next generation of data centers to mitigate high memory costs and stranded memory resources while delivering increased memory bandwidth and capacity when needed.
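
To put the 64 GT/s figure in context, the short sketch below estimates raw link bandwidth per direction for a few common link widths. It is a back-of-the-envelope calculation, not a specification-accurate model: the function name `raw_link_bandwidth_gbytes` is made up for illustration, and the code assumes one bit per lane per transfer and ignores flit, FEC and protocol overhead, so achievable throughput will be lower.

```python
def raw_link_bandwidth_gbytes(gt_per_s: float, lanes: int) -> float:
    """Raw unidirectional link bandwidth in GB/s.

    Assumption: each transfer carries one bit per lane, so
    GT/s x lanes gives Gb/s; dividing by 8 gives GB/s.
    Flit, FEC and protocol overhead are deliberately ignored.
    """
    return gt_per_s * lanes / 8


if __name__ == "__main__":
    # 64 GT/s is the per-lane data rate cited for CXL 3.1.
    for lanes in (4, 8, 16):
        bw = raw_link_bandwidth_gbytes(64, lanes)
        print(f"x{lanes}: {bw:.0f} GB/s per direction (raw)")
```

Under these assumptions, a x16 link at 64 GT/s works out to 128 GB/s of raw bandwidth in each direction, twice the raw figure for the same link width at 32 GT/s.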

“The performance demands of Generative AI and other advanced workloads require new architectural solutions enabled by CXL,” said Neeraj Paliwal, general manager of Silicon IP at Rambus. “The Rambus CXL 3.1 digital controller IP extends our leadership in this key technology delivering the throughput, scalability and security of the latest evolution of the CXL standard for our customers’ cutting-edge chip designs.”

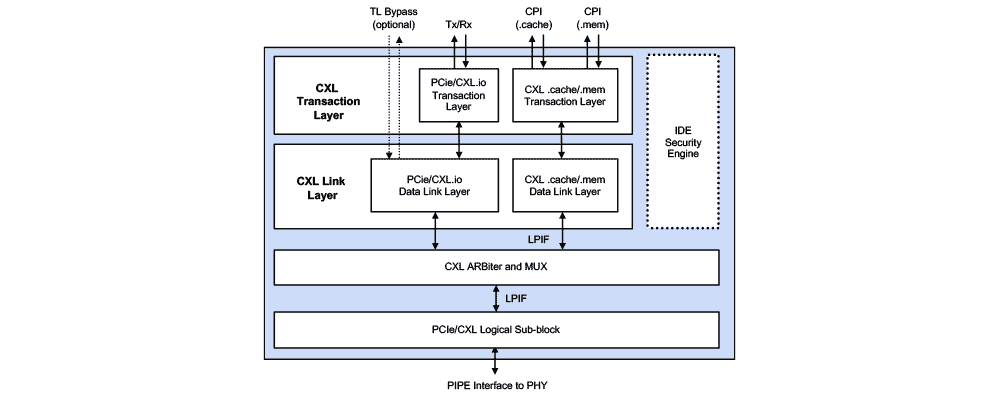

The Rambus CXL 3.1 Controller IP is a flexible design suitable for both ASIC and FPGA implementations. It uses the Rambus PCIe® 6.1 Controller architecture for the CXL.io protocol, and it adds the CXL.cache and CXL.mem protocols specific to CXL. The built-in, zero-latency integrity and data encryption (IDE) module delivers state-of-the-art security against physical attacks on the CXL and PCIe links. The controller can be delivered standalone or integrated with the customer’s choice of CXL 3.1/PCIe 6.1 PHY.

CXL is a key interconnect for data centers and addresses many of the challenges posed by data-intensive workloads. Join Lou Ternullo at our upcoming webinar “Unlocking the Potential of CXL 3.1 and PCIe 6.1 for Next-Generation Data Centers” to learn how CXL and PCIe interconnects can help designers optimize their data center memory infrastructure solutions.
