
Emerging Solutions

Hybrid Memory Research

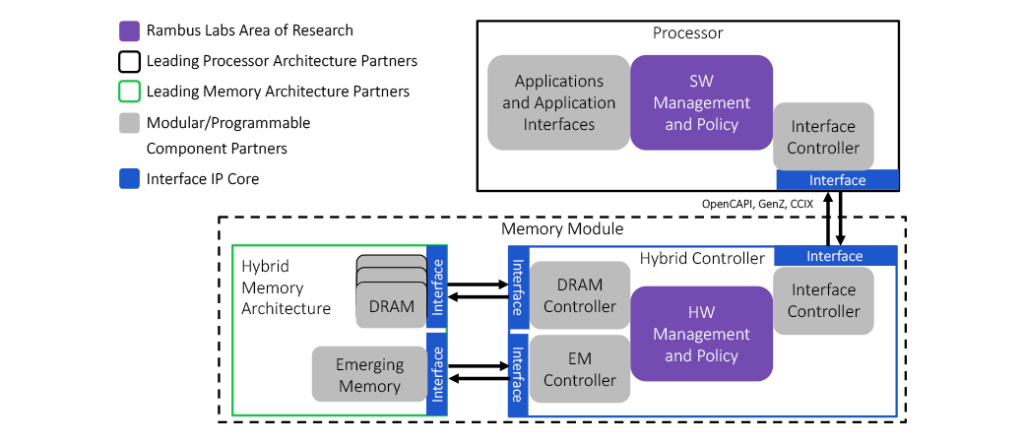

A research program, in partnership with IBM, to explore combining DRAM with emerging memories (EM) to create a high-capacity memory subsystem that delivers performance comparable to DRAM.

Flexible Interface

Memory- and processor-agnostic, using advanced interfaces such as OpenCAPI, Gen-Z, and CCIX

Low Latency

Researching hybrid architectures that perform at the same or nearly the same speed as current DRAM solutions

Increased Capacity

Exploring emerging memory technology options to offer significantly higher capacities
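Whether a hybrid design can approach DRAM speed depends largely on how often the DRAM tier can serve requests directly. The sketch below is a minimal illustration, assuming a simple serial lookup in which every access checks DRAM first and a miss additionally pays the emerging-memory latency. The function name and the latency figures are hypothetical, not Rambus or IBM data.

```python
def effective_latency_ns(hit_rate: float, dram_ns: float, em_ns: float) -> float:
    """Average access latency when DRAM caches an emerging-memory (EM) tier.

    Assumed serial-lookup model: every access pays the DRAM latency, and
    misses additionally pay the EM latency.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    miss_rate = 1.0 - hit_rate
    return dram_ns + miss_rate * em_ns


# Hypothetical latencies, not measured values for any real device.
for h in (0.80, 0.95, 0.99):
    print(f"hit rate {h:.2f}: {effective_latency_ns(h, dram_ns=80.0, em_ns=1000.0):.0f} ns")
```

With these made-up numbers, the average latency falls from 280 ns at an 80% hit rate to 90 ns at 99%. This illustrates why keeping the hot working set in DRAM is central to "DRAM-like" performance from a larger, slower memory.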

A Growing Requirement for Increased Memory Capacities

Leading-edge technologies, such as big data analytics and deep learning (a specific subset of artificial intelligence), are creating increasing demand for memory capacity and performance inside the data center. For example, deep learning techniques based on neural networks are used in applications such as autonomous driving and voice or facial recognition, which must analyze and process large data sets at very high speeds. As a result, the memory bandwidth and capacity of the system directly determine the run time of a learning algorithm and are often the limiting factor in the system’s performance.
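One common way to reason about why bandwidth can set run time is the roofline model, a standard performance model that this page does not itself specify. Under that model, a kernel can finish no faster than the larger of its pure compute time and the time needed to stream its data through memory. The minimal sketch below shows this, with hypothetical function names and figures chosen only for illustration.

```python
def runtime_lower_bound_s(flops: float, bytes_moved: float,
                          peak_flops_per_s: float, mem_bw_bytes_per_s: float) -> float:
    """Roofline-style bound: a kernel cannot finish faster than either its pure
    compute time or the time needed to stream its data through memory."""
    compute_s = flops / peak_flops_per_s
    memory_s = bytes_moved / mem_bw_bytes_per_s
    return max(compute_s, memory_s)


def is_memory_bound(flops: float, bytes_moved: float,
                    peak_flops_per_s: float, mem_bw_bytes_per_s: float) -> bool:
    """True when arithmetic intensity (FLOP/byte) is below the machine balance."""
    return flops / bytes_moved < peak_flops_per_s / mem_bw_bytes_per_s


# Hypothetical workload and machine figures, chosen only for illustration.
args = dict(flops=1e12, bytes_moved=4e11, peak_flops_per_s=1e13, mem_bw_bytes_per_s=2e11)
print(runtime_lower_bound_s(**args), is_memory_bound(**args))  # 2.0 True
```

Here the compute alone would take 0.1 s, but moving the data takes 2 s. Adding compute would not help, while more bandwidth (or keeping more of the data set in fast memory) would.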

In addition to these emerging technologies, many current applications could benefit from a cost-effective way to significantly increase memory capacity. Examples include in-memory databases (IMDBs) for faster decision making, media streaming services that could load full movies into memory, and large graphics rendering projects (animated movie or game creation) that constantly require terabytes of data to be loaded into memory at a time. Credit card fraud detection is a good example of a use case that could benefit from large-capacity IMDBs. The bank behind a credit card must approve or reject a transaction from any user at any moment, which requires quick access to large amounts of information for every transaction. Having decision-making information directly available in memory (rather than on disk) allows the bank to make faster, more accurate decisions and could help prevent fraud.

As these examples show, technology continues to advance, and the volume of data generated by edge devices is growing exponentially. As a result, the demand for near-instantaneous computation is higher than ever, and projects like our hybrid memory research are addressing key limitations in order to keep advancing hardware and reduce bottlenecks in the data center.

The CryptoMedia Security Platform is a complete content storage solution that includes a hardware root of trust, player software, and trusted key provisioning services to meet the growing demand for high-quality digital movie-watching experiences with 4K Ultra-high Definition (UHD) and High Dynamic Range (HDR) content. CryptoMedia solutions deliver a secure digital ownership experience for premium content across multiple devices, including smartphones, tablets, smart TVs, and home storage devices.

- The Player Core that provides protection for stored media while still providing ease of use for the consumer in accessing their content through VIDITY-compliant player devices;

- The Player Agent that is available standalone or integrated with the Key Derivation Core for secure acquisition and playback of VIDITY content; and

- The Key Issuance Center (KIC) that provides keys to player and storage device manufacturers, as well as content providers in the VIDITY ecosystem.

- DPA-resistant solutions, including cryptographic hardware cores and software libraries, that provide protection against side-channel attacks.

Architecting a Hardware-Managed Hybrid DIMM Optimized for Cost/Performance

Rapidly evolving workloads and exploding data volumes place great pressure on data-center compute, I/O, and memory performance, and especially on memory capacity. Increasing memory capacity affordably requires a commensurate reduction in memory cost per bit. DRAM technology scaling has steadily delivered affordable capacity increases, but DRAM scaling is rapidly approaching physical limits. Other technologies, such as Flash, enhanced Flash, Phase Change Memory (PCM), and Spin-Transfer Torque Magnetic RAM (STT-MRAM), hold promise for creating high-capacity memories at a lower cost per bit. However, these technologies have attributes, such as longer access latencies and, in some cases, limited write endurance, that require careful management.
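One common way to manage these attributes is to place a hardware-managed DRAM cache in front of the emerging memory, so that hot data is served from DRAM and repeated writes are absorbed before they reach the slower, wear-limited medium. That approach is assumed here for illustration and is not taken from the Rambus/IBM design. The toy model below is a direct-mapped, write-back cache. The class name, its counters, and the access pattern are all hypothetical.

```python
class HybridMemoryModel:
    """Toy direct-mapped, write-back DRAM cache in front of an emerging-memory (EM) tier.

    Hypothetical model for illustration only. Addresses are block numbers, and
    each DRAM set holds exactly one EM block.
    """

    def __init__(self, dram_sets: int) -> None:
        if dram_sets <= 0:
            raise ValueError("dram_sets must be positive")
        self.dram_sets = dram_sets
        self.tags = [None] * dram_sets    # which EM block each DRAM set currently holds
        self.dirty = [False] * dram_sets  # whether the DRAM copy differs from EM
        self.hits = 0
        self.misses = 0
        self.em_reads = 0
        self.em_writes = 0

    def access(self, block: int, write: bool = False) -> bool:
        """Read or write one block; return True on a DRAM hit."""
        idx = block % self.dram_sets
        tag = block // self.dram_sets
        hit = self.tags[idx] == tag
        if hit:
            self.hits += 1
        else:
            self.misses += 1
            if self.dirty[idx]:
                self.em_writes += 1  # write the evicted dirty block back to EM
            self.em_reads += 1       # fill the DRAM set from EM
            self.tags[idx] = tag
            self.dirty[idx] = False
        if write:
            self.dirty[idx] = True   # the write is absorbed in DRAM for now
        return hit


model = HybridMemoryModel(dram_sets=4)
for _ in range(100):
    model.access(0, write=True)  # hot block, written repeatedly
model.access(4)                  # maps to the same set, evicting block 0
print(model.hits, model.misses, model.em_reads, model.em_writes)  # 99 2 2 1
```

In this run, 100 writes to a hot block reach the emerging memory as a single write-back. A real hybrid DIMM would also need associativity, replacement policy, and wear management, which this sketch deliberately omits.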

Feed the Starving CPUs

Moore’s Law has delivered computing gains through higher clock speeds, more cores, and supplemental computing from accelerators, but DRAM has not kept pace, and memory is a growing bottleneck in the data center. Although DRAM is currently the memory of choice for low latency, its capacity limitations leave processors with more computing power than memory can feed. The standard way to increase capacity is to add memory modules or memory channels, but this comes at a cost in dollars, area, and power. Rambus is continuously working to feed data to the processor through products like our Server DIMM Chipsets for registered and load-reduced memory modules (RDIMMs and LRDIMMs), which enable increased DRAM capacities while maintaining maximum performance. Although RDIMMs and LRDIMMs are a much-needed stopgap, we understand that the divergence between processing power and memory is growing and that other ideas or architectures must be considered. Rambus, as a leader in memory interface and architecture design, continues to research and innovate in this field, looking for ways to feed data-starved CPUs by reducing the latency gap between DRAM and other, higher-capacity technologies.
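The capacity arithmetic behind "more modules or more channels" is simple multiplication. The sketch below contrasts doubling the DIMM count with using higher-capacity DIMMs. The function names and the two-socket, six-channel configuration are hypothetical and do not describe any specific platform. DIMM count is used only as a rough proxy for the added cost, area, and power.

```python
def system_capacity_gb(sockets: int, channels_per_socket: int,
                       dimms_per_channel: int, gb_per_dimm: int) -> int:
    """Total DRAM capacity: every populated DIMM slot times its size."""
    return sockets * channels_per_socket * dimms_per_channel * gb_per_dimm


def dimm_count(sockets: int, channels_per_socket: int, dimms_per_channel: int) -> int:
    """Number of DIMMs installed, a rough proxy for cost, board area, and power."""
    return sockets * channels_per_socket * dimms_per_channel


# Hypothetical two-socket server with six channels per socket.
base = system_capacity_gb(2, 6, 1, 32)        # one 32 GB DIMM per channel
more_dimms = system_capacity_gb(2, 6, 2, 32)  # two DIMMs per channel
denser = system_capacity_gb(2, 6, 1, 64)      # one higher-capacity DIMM per channel
print(base, more_dimms, denser, dimm_count(2, 6, 1), dimm_count(2, 6, 2))  # 384 768 768 12 24
```

Both paths reach 768 GB in this example, but one doubles the number of modules while the other depends on denser modules. A hybrid DRAM-plus-emerging-memory subsystem is a third path that aims to lower the cost per bit of that density.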