CXL Glossary

A glossary of CXL IP terminology and relevant solutions.

A

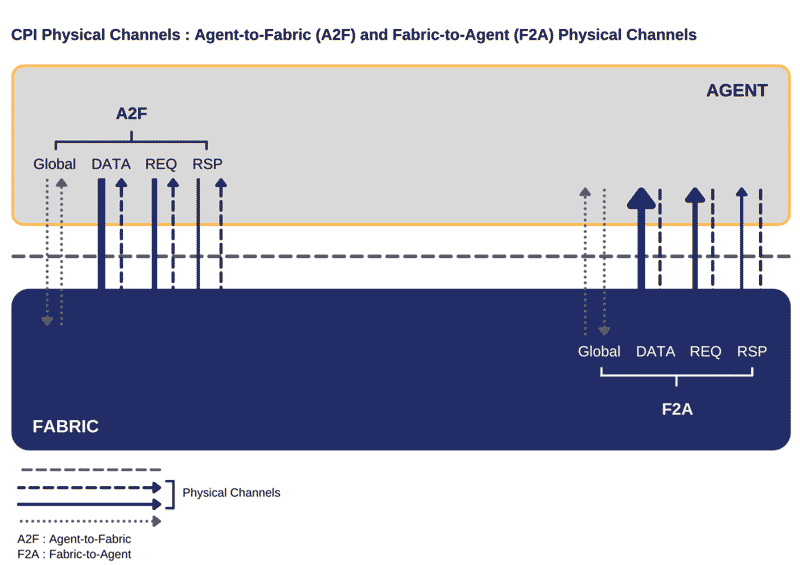

A2F

A2F (Agent-to-Fabric) refers to the agent-to-fabric direction of the interface that couples an agent to a fabric; F2A (Fabric-to-Agent) is the opposite direction. The interface supports a set of coherent interconnect protocols and includes a global channel to communicate control signals that support the interface, a request channel to communicate messages associated with requests to other agents on the fabric, a response channel to communicate responses to other agents on the fabric, and a data channel to communicate messages associated with data transfers to other agents on the fabric, where the data transfers include payload data.

- For CXL.cache on upstream ports, A2F corresponds to H2D from CXL and F2A corresponds to D2H from CXL

- For CXL.cache on downstream ports, A2F corresponds to D2H and F2A corresponds to H2D

- For CXL.mem on upstream ports, A2F corresponds to M2S RwD from CXL and F2A corresponds to S2M DRS from CXL

- For CXL.mem on downstream ports, A2F corresponds to S2M DRS and F2A corresponds to M2S RwD
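The mapping in the list above can be written as a small lookup table. The following Python sketch is illustrative only: the names `CPI_CHANNEL_MAP` and `cxl_channel` are hypothetical, and the table assumes that the H2D/D2H entries belong to CXL.cache and the M2S RwD/S2M DRS entries belong to CXL.mem.

```python
# Lookup table for the A2F/F2A mapping listed above.
# Assumption: H2D/D2H are CXL.cache channels, M2S RwD/S2M DRS are CXL.mem channels.
CPI_CHANNEL_MAP = {
    ("cxl.cache", "upstream"): {"A2F": "H2D", "F2A": "D2H"},
    ("cxl.cache", "downstream"): {"A2F": "D2H", "F2A": "H2D"},
    ("cxl.mem", "upstream"): {"A2F": "M2S RwD", "F2A": "S2M DRS"},
    ("cxl.mem", "downstream"): {"A2F": "S2M DRS", "F2A": "M2S RwD"},
}


def cxl_channel(protocol, port, direction):
    """Return the CXL channel carried in the given CPI direction.

    protocol:  "CXL.cache" or "CXL.mem"
    port:      "upstream" or "downstream"
    direction: "A2F" or "F2A"
    """
    return CPI_CHANNEL_MAP[(protocol.lower(), port.lower())][direction.upper()]
```

For example, `cxl_channel("CXL.mem", "downstream", "A2F")` looks up the channel that a downstream port drives toward the fabric for CXL.mem data.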

Accelerator

Accelerators are devices that may be used by software running on Host processors to offload or perform any type of compute or I/O task. Examples of accelerators include programmable agents (such as GPU/GPCPU), fixed function agents, or reconfigurable agents such as FPGAs.

Ack

An Ack (Acknowledgment) is a coded reply sent to confirm receipt of messages or data.

ACL

ACL (Access Control List) is a list of permissions that specifies the access rights of each user to a particular system object and the operations allowed to be performed on the object. Each object has a security attribute that identifies its ACL.

ACPI

ACPI (Advanced Configuration and Power Interface) is an open standard that operating systems can use to discover and configure hardware components and to perform power management. It defines how a computer's basic input/output system, operating system, and peripheral devices communicate with each other about power management.

AAD

AAD (Additional Authenticated Data) is an input to an Authenticated Encryption operation: data that is integrity protected but not encrypted. In Cloud Key Management Service, for example, AAD is any string passed as part of an encrypt or decrypt request. AAD is used as an integrity check and can help protect data from a confused deputy attack.

Agent

Agent refers to the SoC IP that connects to the fabric. The agent is on one side of the CPI interface, and the fabric is on the other.

ALMP

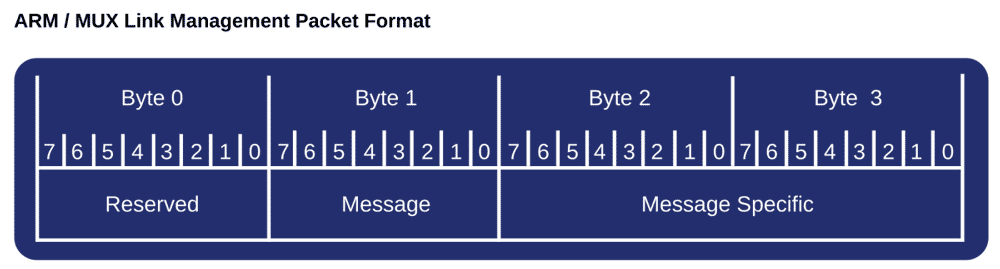

The ARB/MUX uses ALMPs to communicate virtual link state transition requests and responses associated with each link layer to the remote ARB/MUX.

An ALMP (ARB/MUX Link Management Packet) is a 1DW packet with the format shown in the figure above. This 1DW packet is replicated four times on the lower 16 bytes of a 528-bit flit to provide data integrity protection; the flit is zero padded on the upper bits. If the ARB/MUX detects an error in the ALMP, it initiates a retrain of the link.

The message code used in Byte 1 of the ALMP is 0000_1000b. ALMPs can be request or status type. The local ARB/MUX initiates transition of a remote vLSM using a request ALMP. After receiving a request ALMP, the local ARB/MUX processes the transition request and returns a status ALMP to indicate that the transition has occurred. If the transition request is not accepted, no status ALMP is sent and both local and remote vLSMs remain in their current state.
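The replication and padding described above can be sketched in a few lines of Python. This is a minimal illustration, not the specified implementation: the function names are hypothetical, bytes are assumed to be numbered from 0 with the "lower 16 bytes" placed first in the byte string, and comparing the four copies is an assumed way of detecting an error.

```python
from typing import Optional

ALMP_MESSAGE_CODE = 0b0000_1000  # message code carried in Byte 1 of the ALMP
ALMP_BYTES = 4                   # an ALMP is 1 DW
ALMP_COPIES = 4                  # replicated four times in the lower 16 bytes
FLIT_BYTES = 528 // 8            # a 528-bit flit is 66 bytes


def pack_almp_flit(almp: bytes) -> bytes:
    """Replicate a 1DW ALMP four times and zero pad it to a full flit."""
    if len(almp) != ALMP_BYTES:
        raise ValueError("an ALMP is exactly one DW (4 bytes)")
    if almp[1] != ALMP_MESSAGE_CODE:
        raise ValueError("Byte 1 must carry the ALMP message code")
    padding = bytes(FLIT_BYTES - ALMP_BYTES * ALMP_COPIES)
    return almp * ALMP_COPIES + padding


def unpack_almp_flit(flit: bytes) -> Optional[bytes]:
    """Return the ALMP, or None if the copies disagree or the code is wrong.

    A None result stands in for the error case in which the ARB/MUX
    would initiate a retrain of the link.
    """
    if len(flit) != FLIT_BYTES:
        return None
    first = flit[:ALMP_BYTES]
    copies = [flit[i:i + ALMP_BYTES]
              for i in range(0, ALMP_BYTES * ALMP_COPIES, ALMP_BYTES)]
    if any(copy != first for copy in copies):
        return None
    if first[1] != ALMP_MESSAGE_CODE:
        return None
    return first
```

A receiver built this way treats any mismatch between the four copies as a detected error; a real design may apply additional checks.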

C

CDAT

CDAT (Coherent Device Attribute Table) is a table describing performance characteristics of a CXL device or a CXL switch.

It is a data structure that is exposed by a coherent component and describes the performance characteristics of that component. The types of components include coherent memory devices, coherent accelerators, and coherent switches. For example, a CXL accelerator may expose its performance characteristics via CDAT. CDAT describes the properties of the coherent component and is not a function of the system configuration.

CLFlush

CLFlush is a request to the Host to invalidate the cache line specified in the address field. The typical response is GO-I, which the Host sends upon completion in memory.

However, the Host may keep tracking the cache line in Shared state if the Core has issued a Monitor to an address belonging to the cache line.

Thus, the Device must not rely on CLFlush/GO-I as the sole and sufficient condition to flip a cache line from Host to Device bias mode.

Instead, the Device must initiate RdOwnNoData and receive an H2D Response of GO-E before it updates its Bias Table and may subsequently access the cache line without notifying the Host. Under error conditions, a CLFlush request may receive the line in the Error (GO-Err) state. The Device is responsible for handling the error appropriately.
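The bias-flip rule above can be sketched as a small Python class. This is only an illustration of the decision logic: the `BiasTable` class, its method names, and the use of plain strings for request and response names are hypothetical, and recording GO-Err responses in a list is one assumed way of "handling the error".

```python
class BiasTable:
    """Tracks which cache lines the Device may access without notifying the Host."""

    def __init__(self):
        self.device_bias = set()  # line addresses currently in Device bias
        self.errors = []          # (address, request) pairs that returned GO-Err

    def on_h2d_response(self, address, request, response):
        """Process one completed request; return True if the line flipped to Device bias."""
        if response == "GO-Err":
            # Error handling is left to the Device; here it is only recorded.
            self.errors.append((address, request))
            return False
        if request == "RdOwnNoData" and response == "GO-E":
            self.device_bias.add(address)
            return True
        # CLFlush/GO-I is not sufficient: the Host may still track the line as Shared.
        return False
```

With this sketch, a `CLFlush` that completes with `GO-I` leaves the table unchanged, and only a later `RdOwnNoData` that completes with `GO-E` adds the line to `device_bias`.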

CPI

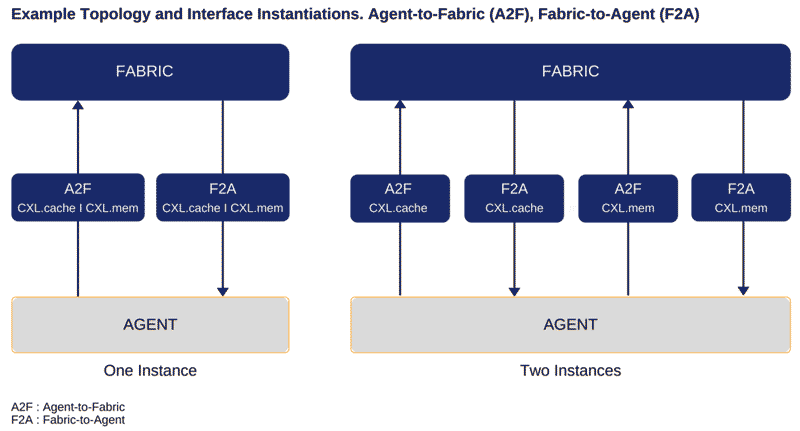

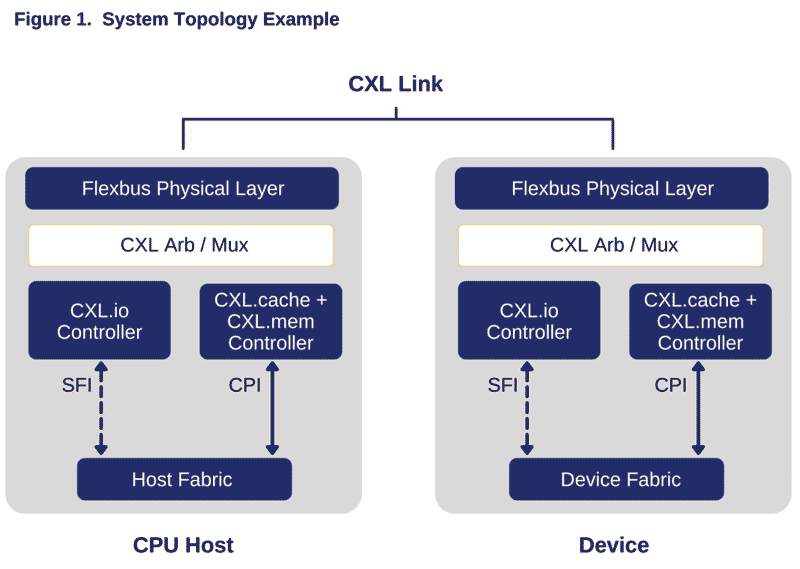

CPI allows mapping of different protocols on the same physical wires.

For example, the CXL.cache and CXL.mem protocols can both be mapped to CPI. Depending on whether the component is a Downstream port or an Upstream port, different channels of CXL.cache and CXL.mem become relevant for the agent-to-fabric (A2F) direction or the fabric-to-agent (F2A) direction. Figure 1 shows an example topology between a Host CPU and a Device.

The specific choices of channel mapping and physical wire sharing between different protocols are implementation-specific and are allowed by the CPI specification.

CXL IDE

CXL IDE (CXL Integrity and Data Encryption) defines mechanisms for providing confidentiality, integrity, and replay protection for data transiting the CXL link. The cryptographic schemes are aligned with current industry best practices. It supports a variety of usage models while providing for broad interoperability. CXL IDE can be used to secure traffic within a Trusted Execution Environment (TEE) that is composed of multiple components; however, the framework for such a composition is out of scope for the CXL specification.

CXL.cache

CXL.cache is an agent coherency protocol that supports device caching of Host memory.

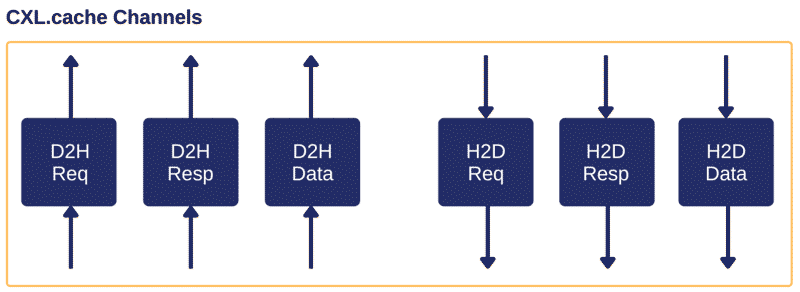

The CXL.cache protocol defines the interactions between the Device and Host as a number of requests that each have at least one associated response message and sometimes a data transfer. The interface consists of three channels in each direction: Request, Response, and Data. The channels are named for their direction (D2H for Device to Host, H2D for Host to Device) and for the transactions they carry (Request, Response, and Data). The independent channels allow different kinds of messages to use dedicated wires and achieve both decoupling and a higher effective throughput per wire.
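The channel naming scheme described above, direction plus transaction type, yields six channels. A short Python sketch of that enumeration follows; the constant names and the "direction, space, type" string format are hypothetical and chosen only for illustration.

```python
from itertools import product

DIRECTIONS = ("D2H", "H2D")                      # Device to Host, Host to Device
TRANSACTIONS = ("Request", "Response", "Data")   # what each channel carries

# Three channels in each direction, named direction + transaction type.
CXL_CACHE_CHANNELS = [f"{direction} {transaction}"
                      for direction, transaction in product(DIRECTIONS, TRANSACTIONS)]
```

Each entry in `CXL_CACHE_CHANNELS` stands for an independent channel with dedicated wires.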

CXL.io

CXL.io is a PCIe-based non-coherent I/O protocol with enhancements for accelerator support.

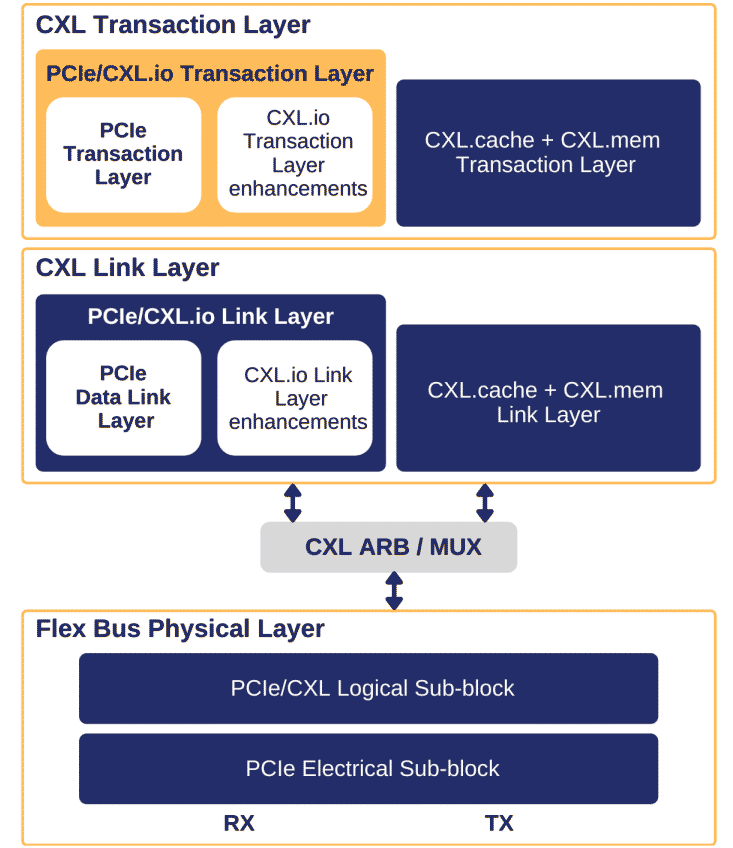

CXL.io provides a non-coherent load/store interface for I/O devices. The figure above shows where the CXL.io transaction layer exists in the Flex Bus layered hierarchy. Transaction types, transaction packet formatting, credit-based flow control, virtual channel management, and transaction ordering rules follow the PCIe definition.

CXL.io Endpoint

A CXL Device is required to support operating in both CXL 1.1 and CXL 2.0 modes. The CXL Alternate Protocol negotiation determines the mode of operation. When the link is configured to operate in CXL 1.1 mode, a CXL.io endpoint must be exposed to software as a PCIe RCiEP; when the link is configured to operate in CXL 2.0 mode, it must be exposed to software as a PCI Express Endpoint.

A CXL.io endpoint function that participates in the CXL protocol must not generate INTx messages. The Non-CXL Function Map DVSEC enumerates functions that do not participate in CXL.cache or CXL.mem. Even though it is not recommended, these non-CXL functions are permitted to generate INTx messages. CXL.io endpoint functions of an MLD component, including non-CXL functions, are not permitted to generate INTx messages.
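The two rules above, how an endpoint is exposed in each mode and which functions may generate INTx, can be summarized in a short Python sketch. The function names and the string and boolean parameters are hypothetical; only the rules themselves come from the text.

```python
def exposed_endpoint_type(mode):
    """Return how a CXL.io endpoint is exposed to software for a negotiated mode."""
    exposure = {
        "CXL 1.1": "PCIe RCiEP",
        "CXL 2.0": "PCI Express Endpoint",
    }
    return exposure[mode]


def may_generate_intx(participates_in_cxl, in_mld_component):
    """Return True if a CXL.io endpoint function is permitted to generate INTx.

    participates_in_cxl: the function takes part in CXL.cache or CXL.mem
    in_mld_component:    the function belongs to an MLD component
    """
    if in_mld_component:
        # No function of an MLD component may generate INTx, CXL or non-CXL.
        return False
    # Outside an MLD, only non-CXL functions may (although it is not recommended).
    return not participates_in_cxl
```

Note that a `True` result means "permitted", not "recommended".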

CXL.io Link Layer

The CXL.io link layer acts as an intermediate stage between the CXL.io transaction layer and the Flex Bus Physical layer. Its primary responsibility is to provide a reliable mechanism for exchanging transaction layer packets (TLPs) between two components on the link. The PCIe Data Link Layer is used as the CXL.io link layer.

CXL.mem

CXL.mem, the CXL Memory Protocol, is a memory access protocol that supports device-attached memory. It is a transactional interface between the CPU and Memory, and it uses the phy and link layer of Compute Express Link (CXL) when communicating across dies. The protocol can be used for multiple Memory attach options, including when the Memory Controller is located in the Host CPU, when the Memory Controller is within an Accelerator device, or when the Memory Controller is moved to a memory buffer chip. It also applies to different Memory types (volatile, persistent, etc.) and configurations (flat, hierarchical, etc.).

D

D2H

D2H (Device to Host) denotes the direction from the Device to the Host. In the CXL communication protocol, to support address space isolation, security measures, and authentication of translated Host Physical Addresses (HPAs) for CXL.cache communications, D2H request accesses may go through a ToC history lookup on a downstream port. This ToC history may also be referred to as context, and it may be communicated in so-called context slots.

DCOH

DCOH refers to the Device Coherency agent on the device that is responsible for resolving coherency with respect to device caches and managing Bias states.

DMTF

DMTF (Distributed Management Task Force) creates open manageability standards spanning diverse emerging and traditional IT infrastructures including cloud, virtualization, network, servers and storage. Member companies and alliance partners worldwide collaborate on standards to improve the interoperable management of information technologies.

Nationally and internationally recognized by ANSI and ISO, DMTF standards enable a more integrated and cost-effective approach to management through interoperable solutions. Simultaneous development of Open Source and Open Standards is made possible by DMTF, which has the support, tools, and infrastructure for efficient development and collaboration.

Based in Portland, Oregon, the DMTF is led by a board of directors representing technology companies including: Broadcom Inc., Cisco, Dell Technologies, Hewlett Packard Enterprise, Hitachi, Ltd., HP Inc., Intel Corporation, Lenovo, and NetApp.

E

EOP

EOP (End of Packet) is a control character indicating the end of a packet. The end of each packet is signaled by the sending agent by driving both differential data lines low for two bit times followed by an idle for one bit time. The agent receiving the packet recognizes the EOP when it detects the differential data lines both low for greater than one bit time.
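The receiver-side rule above can be sketched as a scan over sampled line states. This Python example is a simplified illustration: the function name `find_eop`, the oversampling parameter `samples_per_bit`, and the representation of the line as `(d_plus, d_minus)` pairs are assumptions, not part of the definition.

```python
def find_eop(samples, samples_per_bit):
    """Return the index of the sample at which an EOP is recognized, or None.

    samples:         sequence of (d_plus, d_minus) line levels, truthy = high
    samples_per_bit: number of samples taken per bit time

    The EOP is recognized once both lines have been low for more than
    one bit time, i.e. for more than samples_per_bit consecutive samples.
    """
    low_run = 0
    for index, (d_plus, d_minus) in enumerate(samples):
        if not d_plus and not d_minus:
            low_run += 1
            if low_run > samples_per_bit:
                return index
        else:
            low_run = 0
    return None
```

With four samples per bit time, a sender's two bit times of both lines low produce eight consecutive low samples, and the scan recognizes the EOP on the fifth; a run lasting exactly one bit time is not recognized.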

F

Flex Bus

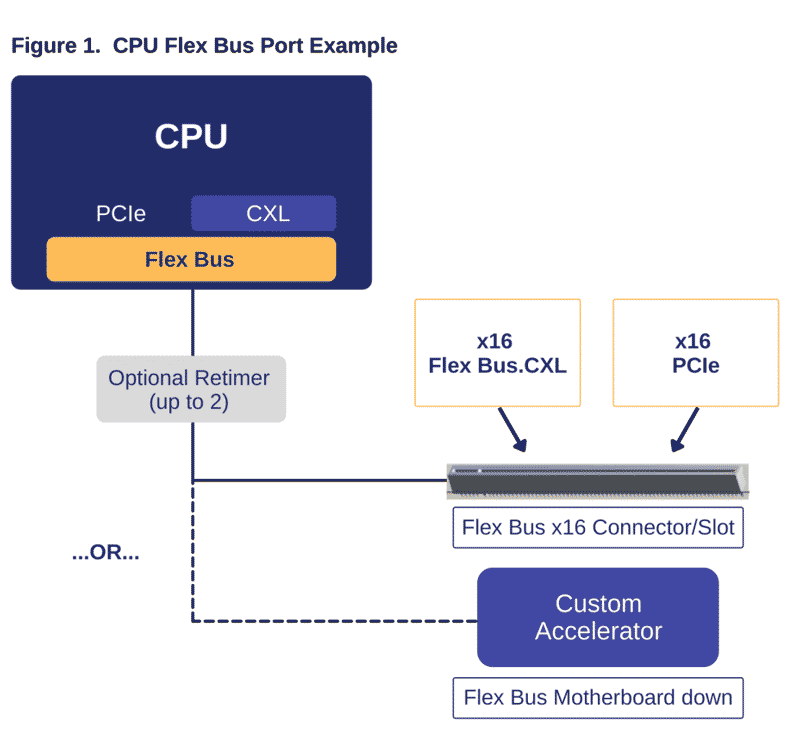

A Flex Bus port allows designs to choose between providing native PCIe protocol or CXL over a high-bandwidth, off-package link; the selection happens during link training via alternate protocol negotiation and depends on the device that is plugged into the slot. Flex Bus uses PCIe electricals, making it compatible with PCIe retimers and with form factors that support PCIe.

Figure 1 provides a high-level diagram of a Flex Bus port implementation, illustrating both a slot implementation and a custom implementation where the device is soldered down on the motherboard. The slot implementation can accommodate either a Flex Bus.CXL card or a PCIe card. One or two optional retimers can be inserted between the CPU and the device to extend the channel length.

FLIT

FLIT (Flow Control UnIT) describes messages sent across CPI that generally express the amount of data passed on in one clock cycle on a CPI physical channel. On some physical channels, messages can consist of more than one FLIT. The term Pump has a similar meaning in the context of CPI and is a term more commonly used in fabrics to refer to data messages sent as multiple FLITs (or pumps).

FLR

The PCIe FLR (Function Level Reset) mechanism enables software to quiesce and reset Endpoint hardware with Function-level granularity. CXL devices expose one or more PCIe functions to host software. These functions can expose FLR capability, and existing PCIe-compatible software can issue FLR to these functions. The PCIe Base Specification provides specific guidelines on the impact of FLR on PCIe function-level state and control registers. For compatibility with existing PCIe software, CXL PCIe functions should follow those guidelines if they support FLR. For example, any software-readable state that potentially includes secret information associated with any preceding use of the Function must be cleared by FLR.

FM

The FM (Fabric Manager) is an entity separate from the Switch or Host firmware that controls aspects of the system related to binding and management of pooled ports and devices.

H

HBM

High bandwidth memory (HBM) is a memory interface used in 3D stacked SDRAM (synchronous dynamic random access memory). It provides wide channels for data to shorten the information path while increasing energy efficiency and decreasing power consumption. Samsung and SK Hynix produce HBM chips.

HDM

HDM (Host-managed Device Memory) is device-attached memory that is mapped to the system coherent address space and is accessible to the Host using standard write-back semantics. Memory located on a CXL device can be mapped as either HDM or PDM.

Home Agent

Home Agent is the agent on the Host that is responsible for resolving system wide coherency for a given address.

I

IDE

IDE (Integrity & Data Encryption) provides confidentiality, integrity, and replay protection for TLPs (Transaction Layer Packets). It flexibly supports a variety of use models while providing broad interoperability. Moreover, it provides efficient encryption/decryption and authentication of TLPs.

The security model considers threats from physical attacks on Links, malicious Extension Devices, etc. that attempt to examine data intended to be confidential, modify TLP contents, and reorder and/or delete TLPs.

L

M

MCP

MCP (Multi-chip Protocol) is an on-package connection typically used between a CPU die and a companion die.

MLD Device and Port

An MLD (Multi-Logical Device) is a Pooled Type 3 component that contains one LD reserved for FM configuration and control and one to sixteen LDs that can be bound to VCSs. An MLD Port is a port that has linked up with a Type 3 Pooled Component. The port is natively bound to an FM-owned PPB inside the switch.
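The LD counts in the definition above can be expressed as a simple validity check. The following Python sketch uses a hypothetical `MultiLogicalDevice` dataclass; it models only the counts stated in the text (one FM-reserved LD plus one to sixteen bindable LDs) and nothing else about an MLD.

```python
from dataclasses import dataclass

MIN_BINDABLE_LDS = 1
MAX_BINDABLE_LDS = 16
FM_RESERVED_LDS = 1  # the LD reserved for FM configuration and control


@dataclass
class MultiLogicalDevice:
    bindable_lds: int  # number of LDs that can be bound to VCSs

    def __post_init__(self):
        # Reject counts outside the one-to-sixteen range from the definition.
        if not MIN_BINDABLE_LDS <= self.bindable_lds <= MAX_BINDABLE_LDS:
            raise ValueError("an MLD has between 1 and 16 bindable LDs")

    @property
    def total_lds(self):
        """Bindable LDs plus the single FM-reserved LD."""
        return self.bindable_lds + FM_RESERVED_LDS
```

Under these assumptions, a fully populated MLD reports 17 LDs in total: 16 bindable LDs and the FM-reserved one.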

P

T

V

VCS

The VCS (Virtual CXL Switch) includes entities within the physical switch belonging to a single VH. It is identified using the VCS-ID.

VH

VH (Virtual Hierarchy) is everything from the RP down, including the RP, PPB, and Endpoints. The Hierarchy ID has the same meaning as in PCIe.

vPPB

A vPPB is a Virtual PCI-to-PCI Bridge inside a CXL switch that is host-owned. A vPPB can be bound to a port that is disconnected or connected to a PCIe component, a CXL 1.1 component, or a CXL 2.0 SLD, or it can be bound to an LD within an MLD component.