PCI Express Glossary

A glossary of PCI Express terminology and relevant solutions.

A

ACS

ACS (Access Control Services) is used to control which devices are allowed to communicate with one another, and thus avoid improper routing of packets. It is especially relevant in systems that use ATS.

The core supports an ACS Egress Control Vector of up to 32 bits. ACS Source Validation and ACS Translation Blocking are implemented in the core for Downstream Ports; all other checks must be implemented in the application or switching logic. ACS provides well-defined interfaces that enable selectively controlled access between PCI Express Endpoints and between Functions within a multi-function device. Some of the benefits enabled through the use of these controls include:

- Use of ACS to prevent many forms of silent data corruption by preventing PCI Express requests from being routed to a peer Endpoint incorrectly. As an example, if there is silent corruption of an address within a Request header within a PCI Express Switch (such as in an implementation of store-and-forward), the transaction may be routed to a downstream Endpoint or Root Port incorrectly and processed as if it were a valid transaction. This could create a number of problems which might not be detected by the associated application or service.

- ACS can be used to preclude PCI Express Requests from routing between Functions within a multi-function device, preventing data leakage.

- ACS can be used to validate that Request transactions between two downstream components are allowed. This validation can occur within the intermediate components or within the RC itself.

In systems using ATS, ACS can enable the direct routing of peer-to-peer Memory Requests whose addresses have been correctly Translated, while blocking or redirecting peer-to-peer Memory Requests whose addresses have not been Translated.
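The two checks that the core implements for Downstream Ports can be sketched as follows. This is a simplified model, not the core's logic: it assumes Source Validation compares the Bus Number of the Requester ID against the port's Secondary to Subordinate Bus Number range, and Translation Blocking rejects upstream Memory Requests whose Address Type (AT) field is not 00b (untranslated). The class and method names are hypothetical.

```python
from dataclasses import dataclass

AT_UNTRANSLATED = 0b00  # Address Type field value for an untranslated address


@dataclass
class DownstreamPortAcs:
    """Hypothetical model of the ACS checks done by one Downstream Port."""
    secondary_bus: int
    subordinate_bus: int
    source_validation: bool = True
    translation_blocking: bool = True

    def check_upstream_request(self, requester_id: int, is_memory: bool,
                               at: int = AT_UNTRANSLATED) -> str:
        """Return 'forward', or the name of the ACS check that blocks the request."""
        bus = (requester_id >> 8) & 0xFF  # Bus Number is bits [15:8] of the Requester ID
        if self.source_validation and not (self.secondary_bus <= bus <= self.subordinate_bus):
            return "source_validation_violation"
        if self.translation_blocking and is_memory and at != AT_UNTRANSLATED:
            return "translation_blocking_violation"
        return "forward"
```

For a port owning buses 2 to 4, a request from bus 5 is blocked by Source Validation, and a request from bus 2 carrying a translated address is blocked by Translation Blocking; everything else is left to the application or switching logic.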

AER

AER (Advanced Error Reporting) is an optional PCI Express feature that provides more detailed error reporting and control than the baseline error reporting scheme. AER errors are categorized as either correctable or uncorrectable. A correctable error is recovered by the PCI Express protocol without the need for software intervention and without any risk of data loss. An uncorrectable error can be either fatal or non-fatal: a non-fatal uncorrectable error makes a particular transaction unreliable, while a fatal uncorrectable error makes the link itself unreliable.

The AER driver in the Linux kernel drives the reporting of (and recovery from) these events. In the case of a correctable event, the AER driver simply logs a message that the event was encountered and recovered by hardware. Device drivers can be instrumented to register recovery routines when they are initialized. Should a device experience an uncorrectable error, the AER driver will invoke the appropriate recovery routines in the device driver that controls the affected device. These routines can be used to recover the link for a fatal error, for example.
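Whether an uncorrectable error is treated as fatal or non-fatal is controlled by the AER Uncorrectable Error Severity register: a set bit marks the matching error as fatal. The sketch below decodes a status value against a severity mask, using a subset of the Uncorrectable Error Status bit positions. The helper name is hypothetical, and this is not the Linux AER driver code, which instead delivers events to device drivers through the `error_detected`, `slot_reset` and `resume` callbacks of `struct pci_error_handlers`.

```python
# Subset of the Uncorrectable Error Status register bits (AER capability).
UNCORRECTABLE_BITS = {
    4: "Data Link Protocol Error",
    5: "Surprise Down Error",
    12: "Poisoned TLP Received",
    13: "Flow Control Protocol Error",
    14: "Completion Timeout",
    15: "Completer Abort",
    16: "Unexpected Completion",
    17: "Receiver Overflow",
    18: "Malformed TLP",
    19: "ECRC Error",
    20: "Unsupported Request Error",
    21: "ACS Violation",
}


def decode_uncorrectable(status: int, severity: int) -> dict:
    """Split the set status bits into fatal and non-fatal error lists.

    A bit set in `severity` marks the matching error as fatal;
    a cleared bit marks it as non-fatal.
    """
    result = {"fatal": [], "non_fatal": []}
    for bit, name in sorted(UNCORRECTABLE_BITS.items()):
        if status & (1 << bit):
            key = "fatal" if severity & (1 << bit) else "non_fatal"
            result[key].append(name)
    return result
```

A driver-side recovery policy could then, for example, retry the affected transaction for non-fatal errors and request a link reset for fatal ones.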

ARI

For the needs of next-generation I/O implementations, ARI (Alternative Routing-ID Interpretation) defines a new way to interpret the Device Number and Function Number fields within Routing IDs, Requester IDs, and Completer IDs, increasing the number of functions allowed per multi-function device. It allows up to 256 physical functions to be implemented in a device, instead of the normal maximum of 8. ARI is required for SR-IOV.
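The reinterpretation of the 16-bit ID can be shown with a small decoder. With the traditional layout the low byte is split into a 5-bit Device Number and a 3-bit Function Number; with ARI the whole low byte is an 8-bit Function Number, which is where the limit of 256 functions comes from. The function name is illustrative.

```python
def decode_routing_id(rid: int, ari: bool) -> tuple:
    """Split a 16-bit Routing/Requester/Completer ID into (bus, device, function).

    Traditional: Bus[15:8] Device[7:3] Function[2:0]
    ARI:         Bus[15:8] Function[7:0] (Device Number treated as 0)
    """
    bus = (rid >> 8) & 0xFF
    if ari:
        return bus, 0, rid & 0xFF
    return bus, (rid >> 3) & 0x1F, rid & 0x7
```

For example, ID 0x0123 decodes as bus 1, device 4, function 3 traditionally, but as bus 1, function 35 with ARI.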

ASPM L0s

ASPM L0s is a mandatory PCI Express native power management mode that allows a device to quickly suspend or resume transmission during periods of inactivity. ASPM L0s does not require any application action.

It can be entered by any type of device and does not require any negotiation with the link partner.

ASPM L0s is per-direction, meaning that either or both RX and TX sides can be in L0s independently.

ASPM L1

ASPM L1 is an optional PCI Express native power management mode that enables the link to be put into low-power mode with the ability to restart quickly during periods of inactivity. ASPM L1 does not require any application action. It involves negotiation between the link partners and can only be initiated by an upstream port (such as an endpoint).

Atomic Operations

The FetchAdd, Swap, and CAS (Compare and Swap) TLPs each perform a read-modify-write operation on a memory location with a single request. AtomicOps enable advanced synchronization mechanisms that are particularly useful when there are multiple producers and/or multiple consumers that need to be synchronized in a non-blocking fashion. AtomicOps also enable lock-free statistics counters, for example where a device atomically increments a counter, and host software atomically reads and clears the counter.
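The completer-side semantics of the three AtomicOps can be modelled on a word of memory. This is a minimal sketch, assuming a Python dict as a stand-in for target memory and hypothetical function names; in every case the completion returns the original value of the target location. FetchAdd and Swap accept 32- and 64-bit operands, and CAS also accepts 128-bit operands.

```python
def _check_size(size_bits: int, allowed: tuple) -> int:
    """Validate the operand size and return the matching bit mask."""
    if size_bits not in allowed:
        raise ValueError(f"unsupported operand size: {size_bits} bits")
    return (1 << size_bits) - 1


def fetch_add(mem: dict, addr: int, add: int, size_bits: int = 32) -> int:
    """FetchAdd: store (original + add) modulo 2**size_bits, return original."""
    mask = _check_size(size_bits, (32, 64))
    original = mem.get(addr, 0)
    mem[addr] = (original + add) & mask
    return original


def swap(mem: dict, addr: int, new: int, size_bits: int = 32) -> int:
    """Swap: store `new` unconditionally, return original."""
    mask = _check_size(size_bits, (32, 64))
    original = mem.get(addr, 0)
    mem[addr] = new & mask
    return original


def cas(mem: dict, addr: int, compare: int, new: int, size_bits: int = 32) -> int:
    """CAS: store `new` only if original == compare, return original."""
    mask = _check_size(size_bits, (32, 64, 128))
    original = mem.get(addr, 0)
    if original == compare:
        mem[addr] = new & mask
    return original
```

The lock-free statistics counter mentioned above maps onto these directly: the device issues `fetch_add(mem, counter, 1)` and host software reads and clears the counter in one step with `swap(mem, counter, 0)`.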

ATS

In a virtualized environment, ATS (Address Translation Services) allows an Endpoint to communicate with the Root Complex in order to maintain a translation cache. The Endpoint can then relieve the Root Complex of the time-consuming address translation task, resulting in higher performance. Note that the address translation cache is not implemented in the core, as it is very application-specific.
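Because the translation cache is left to the application, here is a minimal sketch of what an Endpoint-side Address Translation Cache (ATC) might look like. It assumes 4 KB translation granularity, a hypothetical `request_translation` callback standing in for an ATS Translation Request and Completion exchange with the Root Complex, and a plain dict with no eviction policy.

```python
from typing import Callable, Dict

PAGE_SHIFT = 12  # assumed 4 KB translation granularity


class AddressTranslationCache:
    """Hypothetical ATC: caches untranslated-page -> translated-page mappings."""

    def __init__(self, request_translation: Callable[[int], int]):
        self._request = request_translation
        self._entries: Dict[int, int] = {}
        self.misses = 0

    def translate(self, untranslated_addr: int) -> int:
        page = untranslated_addr >> PAGE_SHIFT
        offset = untranslated_addr & ((1 << PAGE_SHIFT) - 1)
        if page not in self._entries:
            # Only a miss costs a round trip to the Root Complex.
            self.misses += 1
            self._entries[page] = self._request(page)
        return (self._entries[page] << PAGE_SHIFT) | offset

    def invalidate(self, untranslated_addr: int) -> None:
        """Drop a cached entry, e.g. when the host sends an Invalidate Request."""
        self._entries.pop(untranslated_addr >> PAGE_SHIFT, None)
```

Repeated accesses to the same page hit the cache, so only the first access pays the translation latency; an invalidation forces the next access to request a fresh translation.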

Autonomous Bandwidth Change

The application can direct the Core to change link speed and/or width in order to adjust link bandwidth to meet its throughput requirements. Although this is not considered a low-power feature, tuning link bandwidth can save significant power.

Autonomous Link Width/Speed Change

Allows a device to reduce/increase link width and/or speed in order to adjust bandwidth and save power when less bandwidth is necessary.
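Choosing a link configuration for a given throughput requirement is simple arithmetic on per-lane rates and line-code efficiency (8b/10b for Gen1 and Gen2, 128b/130b from Gen3). The selection policy below, picking the configuration with the lowest bandwidth that still meets the requirement as a rough proxy for lowest power, is a hypothetical example and not the core's algorithm; packet and protocol overhead are ignored.

```python
# Per-lane raw rate (GT/s) and line-code efficiency for each generation.
GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}
WIDTHS = (1, 2, 4, 8, 16)


def lane_bandwidth_mb_s(gen: int) -> float:
    """Usable bandwidth per lane and per direction in MB/s, ignoring packet overhead."""
    rate_gt_s, efficiency = GENERATIONS[gen]
    return rate_gt_s * 1e9 * efficiency / 8 / 1e6


def smallest_link(required_mb_s: float, max_gen: int, max_width: int):
    """Return the (gen, width) with the lowest bandwidth that meets the requirement."""
    candidates = [
        (lane_bandwidth_mb_s(g) * w, g, w)
        for g in GENERATIONS if g <= max_gen
        for w in WIDTHS if w <= max_width
    ]
    feasible = [c for c in candidates if c[0] >= required_mb_s]
    if not feasible:
        return None  # requirement cannot be met with this link
    _, gen, width = min(feasible)
    return gen, width
```

For a 900 MB/s requirement on a Gen3 x4 capable link, this picks Gen3 x1 (about 985 MB/s) rather than Gen1 x4 or Gen2 x2 (about 1000 MB/s each).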

AXI

AXI (Advanced eXtensible Interface) is a protocol specification in ARM's AMBA family. AXI, the third generation of AMBA interface defined in the AMBA 3 specification, targets high-performance, high-clock-frequency system designs and includes features that make it suitable for high-speed sub-micrometer interconnects. The AXI Layer enables:

- Separate address/control and data phases

- Support for unaligned data transfers using byte strobes

- Burst based transactions with only start address issued

- Issuing of multiple outstanding addresses with out of order responses

- Easy addition of register stages to provide timing closure
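The "only start address issued" and "unaligned transfers" points can be illustrated for an INCR burst: the slave derives every beat address from the start address, the first beat may be unaligned, and later beats are aligned to the transfer size. A minimal sketch with a hypothetical function name; it also enforces the AXI rule that a burst must not cross a 4 KB boundary, and it does not cover FIXED or WRAP bursts.

```python
AXI_BOUNDARY = 4096  # AXI bursts must not cross a 4 KB address boundary


def incr_burst_addresses(start: int, beat_bytes: int, beats: int) -> list:
    """Address of each beat in an AXI INCR burst, derived from the start address only."""
    if beat_bytes <= 0 or beat_bytes & (beat_bytes - 1):
        raise ValueError("beat size must be a power of two")
    if beats < 1:
        raise ValueError("a burst has at least one beat")
    aligned = start & ~(beat_bytes - 1)
    # First beat uses the (possibly unaligned) start address; the rest are aligned.
    addrs = [start] + [aligned + i * beat_bytes for i in range(1, beats)]
    if start // AXI_BOUNDARY != addrs[-1] // AXI_BOUNDARY:
        raise ValueError("burst crosses a 4 KB boundary")
    return addrs
```

For example, a 3-beat burst of 4-byte transfers starting at 0x1002 touches 0x1002, 0x1004 and 0x1008; the byte strobes of the first beat would disable the two bytes below 0x1002.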

Generally, AMBA is the solution used to interconnect blocks in a SoC. This solution enables:

- Facilitation of right-first-time development of embedded microcontroller products with one or more CPUs, GPUs or signal processors

- Technology independence, to allow reuse of IP cores, peripheral and system macrocells across diverse IC processes

- Enablement of modular system design to improve processor independence, and the development of reusable peripheral and system IP libraries

- Minimization of silicon infrastructure while supporting high performance and low power on-chip communication

C

CDC

The CDC (Clock Domain Crossing) handles the transition between the PIPE PCI Express clock domain and the User Application and Core clock domains. PCIe logic typically runs at PIPE interface clock frequency. CDC allows part of this logic to run at an application specific clock frequency. Benefits of CDC include:

- Easier integration with application logic: application front-end does not have to run at same frequency as PCIe logic

- Application clock can be lower than PCIe clock, which can ease implementation in slower technologies

- Application clock can be reduced or even turned-off to save power (depending on implementation)

CDC location is an important choice because:

- There can be many signals to resynchronize, which consumes logic and adds latency

- There can be required relationships between the PIPE clock and the application clock, which can limit the usability of the CDC

- There can be side effects inside the PCIe logic

A CDC Layer is required to handle different clock domain transitions. The CDC Layer consists of:

- The Receive Buffer, which stores the received TLPs to forward to the user back-end interface

- The Retry Buffer, which stores the TLPs to transmit on the PCI Express link

- A set of registers, which resynchronize information between the PIPE PCI Express clock and the PCI Express Core clock domains
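Multi-bit values such as buffer pointers cannot be safely resynchronized bit by bit, because the receiving clock may sample some bits before they change and others after. A common technique, shown here as an assumption since the text does not say how the core does it, is to pass such pointers across the boundary in Gray code, where consecutive values differ in exactly one bit, so a sampled value is always either the old or the new pointer.

```python
def to_gray(n: int) -> int:
    """Binary to Gray code: consecutive integers differ in exactly one bit."""
    return n ^ (n >> 1)


def from_gray(g: int) -> int:
    """Gray code back to binary (XOR of all right shifts of g)."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def bits_changed(a: int, b: int) -> int:
    """Number of bits that toggle between two register values."""
    return bin(a ^ b).count("1")
```

Incrementing a binary pointer from 7 to 8 toggles four bits at once, whereas the Gray-coded pointer toggles only one, including when a 4-bit pointer wraps from 15 back to 0.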

CLKREQ#

Optional side-band pin present in some form factors, which enables power saving. It is used by the Clock Power Management and L1 PM substates with CLKREQ# features. Note that only one of these power saving techniques can be enabled at once.

Clock Gating

Techniques used to reduce power consumption when some blocks of a circuit are not in use. Clock gating enables dynamic power reduction by turning the clock off: flip-flop switching power consumption then goes to zero. Only leakage currents remain.

Crosslink

Allows two ports of the same type (two Upstream Ports or two Downstream Ports) to train a link together. At its inception, the crosslink was the only option in the PCIe specification that began to address the problem of interconnecting host domains. The crosslink is a physical layer option that works around the need for a defined upstream/downstream direction in the link training process.

Cut-Through

Cut-Through allows TLPs to be forwarded to their destination interface before they are completely stored in the Rx/Tx buffer. This is as opposed to Store and Forward, where TLPs must be stored completely in the Rx/Tx buffer before they can be forwarded to the destination interface. Cut-Through enables significant latency reductions for large TLPs.

D

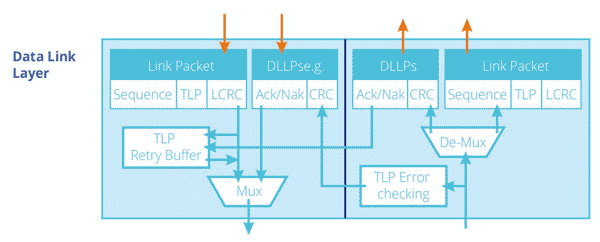

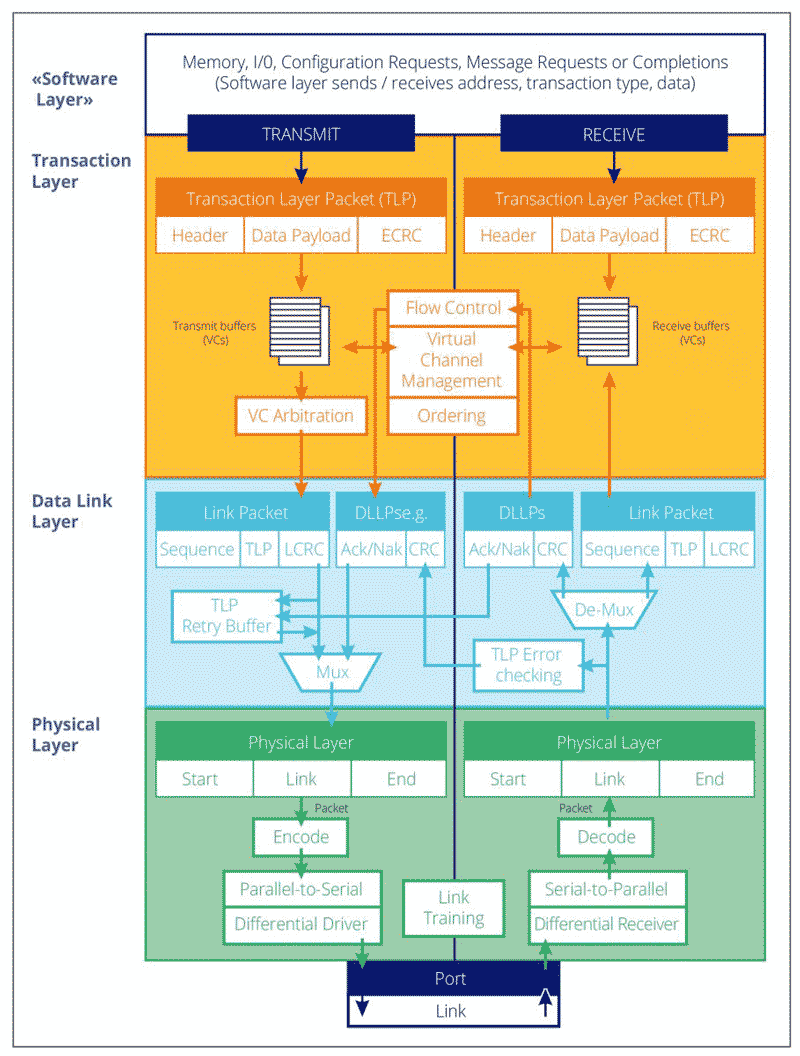

Data Link Layer

The Data Link Layer manages communication at the link level between the two connected PCIe components in order to ensure the correct transmission of packets. Its main roles are: generation and check of the CRC and Sequence Number of the TLP, initialization and update of flow control credits, generation of ACK/NAK, and the management of the Receive and Retry Buffers.
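The interaction between Sequence Numbers, Ack/Nak DLLPs and the Retry Buffer can be sketched as below. Sequence Numbers are 12 bits wide and wrap modulo 4096; an Ack or Nak carries the Sequence Number of the last TLP received correctly, so every TLP up to and including it can be purged, and a Nak additionally triggers a replay of the remaining TLPs. The class is a simplified, hypothetical model that ignores the replay timer and the REPLAY_NUM counter.

```python
from collections import deque

SEQ_MOD = 4096  # 12-bit Sequence Number space


class RetryBuffer:
    """Hypothetical transmit-side Retry Buffer keyed by 12-bit Sequence Numbers."""

    def __init__(self, first_seq: int = 0):
        self.next_seq = first_seq
        self.pending = deque()  # (seq, tlp) pairs, oldest first

    def transmit(self, tlp) -> int:
        seq = self.next_seq
        self.pending.append((seq, tlp))
        self.next_seq = (seq + 1) % SEQ_MOD
        return seq

    def _purge_through(self, acked_seq: int) -> None:
        # Remove entries at or "before" acked_seq, using modulo-4096 comparison.
        while self.pending:
            oldest = self.pending[0][0]
            if (acked_seq - oldest) % SEQ_MOD < SEQ_MOD // 2:
                self.pending.popleft()
            else:
                break

    def ack(self, acked_seq: int) -> None:
        self._purge_through(acked_seq)

    def nak(self, acked_seq: int) -> list:
        self._purge_through(acked_seq)
        return [tlp for _, tlp in self.pending]  # TLPs to replay, oldest first
```

A Nak whose Sequence Number precedes every outstanding TLP purges nothing and replays the whole buffer, which is the case where the first TLP after the last Ack was corrupted.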

Deskew

The Deskew block in Rambus IP is a module located in the Physical Layer that realigns data between lanes (misalignment can be due to lane-to-lane skew) and then identifies and sorts the packets according to type:

- PLP (Physical Layer Packets) are discarded

- DLLP (Data Link Layer Packets) are forwarded to the DLLP Decode block

- TLP (Transaction Layer Packets) are forwarded to the TLP Decode block

The Deskew block is also responsible for detecting framing errors and reporting them to the LTSSM and Configuration blocks.

Device Readiness Notification

Allows a device to send a message to the system indicating when it is ready to respond to its first Configuration Request with a successful completion status. It works with standard VDMs (Vendor-Defined Messages) and requires an extended capability for Downstream Ports. This feature helps avoid the long, architected, fixed delays following various forms of reset before software is permitted to perform its first Configuration Request. These delays can be very large:

- 1 second if Configuration Retry Status (CRS) is not used

- 100ms for most cases if CRS is used

- 10ms “minimum recovery time” when a Function is programmed from D3hot to D0

In addition, providing an explicit readiness indication avoids the complexity of the existing CRS mechanism, which can require periodic polling for up to 1 second following reset.

DLCMSM

The DLCMSM (Data Link Control and Management State Machine) block implements the Data Link Control and Management State Machine. This state machine initializes the Data Link Layer to “dl_up” status, after having initialized Flow Control credits for Virtual Channel 0.

In order to do this, the DLCMSM:

- Is informed by the DLLP Decode block of the reception of Initialization Flow Control packets

- Orders the DLLP Encode block to transmit Initialization Flow Control packets

DLLP

Data Link Layer Packet. The DLLP Decode block checks and decodes the following types of DLLP:

- Initialization Flow Control DLLP: the DLLP Decode block informs the DLCMSM block of its reception and content

- Ack/Nak DLLP: the DLLP Decode block informs the TxSeqNum and CRC Generation block of its reception and content so that the TLPs contained in the Retry Buffer can be discarded or replayed

- Update Flow Control DLLP: the DLLP Decode block reports its content to the Tx Flow Control Credit block

- Power Management DLLP: the DLLP Decode block informs the Configuration block of its reception and content

The DLLP Encode block encodes the following different types of DLLP (Data Link Layer Packets):

- Initialization Flow Control DLLPs; when a request is received from the DLCMSM block

- High Priority Ack/Nak DLLPs; when the RxSeqNum and CRC Checking block reports that an incorrect TLP has been received, or when no Ack DLLP has been sent during a predefined time slot

- High Priority Update Flow Control DLLPs; based on the available Flow Control Credits reported by the Rx Flow Control Credit block

- Power Management DLLPs; when a request is received from the Configuration block

- Low Priority Update Flow Control DLLPs; based on the available Flow Control Credits reported by the Rx Flow Control Credit block

- Low Priority Ack/Nak DLLPs; based on the information reported by the RxSeqNum and CRC Checking block

The encoded DLLPs are transmitted to the TxAlign and LTSTX block.

If one or more DLLPs are not acknowledged by the TxAlign and LTSTX block during a symbol time, the DLLP Encode block can change the DLLP content and its priority level to ensure that it always transmits the highest-priority DLLP to the TxAlign and LTSTX block.

DMA

DMA (Direct Memory Access) is an efficient method of transferring data. A DMA engine handles the details of memory transfers to a peripheral on behalf of the processor, off-loading this task. A DMA transfer includes information about:

- Its source, which is defined by its interface, its transfer parameters and its starting address

- Its destination, also defined by its interface, parameters and address

- The transfer configuration, which includes the transfer length (up to 4 GB, or configurable as infinite), its controls (start/end/ending criteria/interrupt/reporting request), and its status (error/end status/length)

Downstream Port

A port that faces away from the Root Complex, toward the leaf segments of the PCIe hierarchy (that is, toward an Upstream Port or an Endpoint).

DPA

DPA (Dynamic Power Allocation) extends existing PCIe device power management to provide active device power management substates for appropriate devices, while taking into account existing PCIe PM capabilities, including PCI-PM and Power Budgeting. An additional capability is implemented that contains advanced power allocation settings. This feature can be implemented entirely in the application.

DPC

DPC (Downstream Port Containment) improves PCIe error containment and allows software to recover from asynchronous removal events, which was not possible with the earlier PCIe specification.

E

ECAM

ECAM (Enhanced Configuration Access Mechanism) is a mechanism that allows software to access the PCIe Configuration Space. The space available per function is 4 KB. With ECAM, the configuration space is mapped into memory addresses, so a single memory request in the mapped address range generates one configuration request on the bus. Because each access is a single command rather than a multi-step sequence, ECAM also suits multi-CPU configurations in which multiple threads may try to access configuration space at the same time.
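The memory address of a configuration register under ECAM is formed by placing the bus, device, function and register offset in fixed bit fields above a platform-specific base address: offset in bits [11:0] (the 4 KB per function), function in [14:12], device in [19:15] and bus in [27:20]. The base value used below is purely illustrative.

```python
def ecam_address(base: int, bus: int, device: int, function: int, offset: int) -> int:
    """Memory address of a configuration register under ECAM."""
    if not (0 <= bus < 256 and 0 <= device < 32 and 0 <= function < 8 and 0 <= offset < 4096):
        raise ValueError("field out of range")
    return base + (bus << 20) + (device << 15) + (function << 12) + offset


ECAM_BASE = 0xE0000000  # illustrative only; the real base comes from the platform firmware
```

With ARI, device and function together occupy bits [19:12] as a single 8-bit Function Number, so the same address layout still applies.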

ECC

ECC (Error Correcting Code) is an optional mechanism implemented in some memories that can detect and/or correct some types of data errors that occurred in memory. When ECC is implemented, uncorrectable errors are reported on the RDDERR output of the transmit and receive buffers. ECC allows robust error detection and enables correction of single-bit errors on the fly. It minimizes the impact of transmission errors.

ECRC

ECRC (End-to-end Cyclic Redundancy Check) is an optional PCIe feature that is available when Advanced Error Reporting (AER) is implemented. It protects the contents of a TLP from its source to its ultimate receiver and is typically generated and checked by the transaction layer. Checking the LCRC verifies that no transmission errors occurred across a given link, but the LCRC is recalculated for the packet at the egress port of each routing element, so it cannot detect corruption inside that element. To protect against this, the ECRC is carried forward unchanged on its journey between the Requester and Completer. When the target device checks the ECRC, any errors introduced along the way have a high probability of being detected.
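The difference between the per-hop LCRC and the end-to-end ECRC can be illustrated with a toy model. It uses Python's `zlib.crc32` purely as a stand-in CRC-32 and is not bit-exact with the PCIe LCRC or ECRC calculations, which define their own bit ordering and covered fields. The switch in the model corrupts the payload internally and then regenerates the LCRC for the next link, so the LCRC check at the target passes while the ECRC check fails.

```python
import zlib


def crc(data: bytes) -> int:
    """Stand-in CRC-32 (not the exact PCIe LCRC/ECRC computation)."""
    return zlib.crc32(data) & 0xFFFFFFFF


def send(payload: bytes) -> dict:
    """Requester: ECRC covers the TLP end to end, LCRC covers the first link."""
    return {"payload": payload, "ecrc": crc(payload), "lcrc": crc(payload)}


def switch_forward(tlp: dict, corrupt: bool) -> dict:
    """Switch: check the ingress LCRC, then regenerate it for the egress link."""
    if crc(tlp["payload"]) != tlp["lcrc"]:
        raise ValueError("LCRC error on ingress link")
    payload = tlp["payload"]
    if corrupt:  # e.g. a bit flipped inside the switch's internal buffer
        payload = bytes([payload[0] ^ 0x01]) + payload[1:]
    return {"payload": payload, "ecrc": tlp["ecrc"], "lcrc": crc(payload)}


def target_checks(tlp: dict) -> tuple:
    """Return (lcrc_ok, ecrc_ok) as seen by the ultimate receiver."""
    return crc(tlp["payload"]) == tlp["lcrc"], crc(tlp["payload"]) == tlp["ecrc"]
```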

Endpoint

An Endpoint is a device that resides at the bottom of the branches of the tree topology and implements a single Upstream Port toward the Root. Devices that were designed for the operation of an older bus like PCI-X but now have a PCIe interface designate themselves as “Legacy PCIe Endpoints” in a configuration register and this topology includes one.

Extended Tag

Initially, PCIe allowed a device to use up to 32 tags by default, and up to 256 tags only if this capability was enabled by the system. An engineering change was later published that allows PCIe devices to use 256 tags by default.

External Interrupt Mode

External interrupt mode enables applications to send MSI / INTx messages rather than relying on the Core to do so. This can be useful, for example, if an application needs to maintain order between TLP transmission and interrupt messages.

I

IDO

Adds a new ordering attribute in TLP headers that can optionally be set to relax ordering requirements between unrelated traffic (coming from different requesters/completers). IDO (ID-based Ordering) preserves the producer/consumer programming model and helps prevent deadlocks in PCIe-based systems (potentially including bridges to PCI/PCI-X). The producer/consumer model restricts the re-ordering of transactions, which affects performance, especially read latency.

Internal Error Reporting

Extension to AER that allows internal or application-specific errors to be reported. It also specifies a mechanism to store multiple headers.

L

L1

L1 is a legacy power state that is automatically entered by an upstream port (such as an endpoint) when its legacy power state is not D0. L1 entry does not require any action from the application; however, the application can request L1 exit.

L1 PM substates with CLKREQ#

Defines three new L1.0/L1.1/L1.2 substates in order to help achieve greater power savings while in L1 or ASPM L1 state. Link partners use CLKREQ# pin to communicate and change substate.

L2

L2 is a legacy power state where the PCIe link is completely turned off and power may be removed. L2 entry must be initiated by a downstream port (such as a Root Port).

LCRC

LCRC (Link Cyclic Redundancy Code) is a standard PCIe feature that protects the contents of a TLP across a single PCIe link. It is generated/checked in the data link layer and is not available to the transaction layer and the application. LCRC is an error detection code added to each TLP. The first step in error checking is simply to verify that this code still evaluates correctly at the receiver. Each packet is given a unique incremental Sequence Number as well. Using that Sequence Number, we can also require that TLPs must be successfully received in the same order they were sent. This simple rule makes it easy to detect missing TLPs at the Receiver’s Data Link Layer.

Lightweight Notification

The Lightweight Notification (LN) protocol allows an Endpoint to register some cache lines and be informed via a message when they are changed. Some of the potential benefits of the LN protocol across PCIe include:

- I/O bandwidth and I/O latency reduction: A device that caches data instead of transferring it over PCIe for each access can dramatically reduce I/O latency and consumed I/O bandwidth for some use models. Reducing I/O bandwidth consumption also reduces host memory bandwidth consumption.

- Lightweight signaling: A device using LN protocol with host memory can enable host software to signal the device by updating a cacheline instead of performing programmed I/O (PIO) operations, which often incur high software overheads and introduce synchronization and flow-control issues.

- Dynamic device associations: Virtual machine (VM) guest drivers communicating with a device strictly via host memory structures (with no PIOs) makes it easier for guests to be migrated, and for VM hosts to switch dynamically between using fully virtualized I/O for that device versus using direct I/O for that device.

LTR

LTR (Latency Tolerance Reporting) is a mechanism that enables Endpoints to send information about their service latency requirements for Memory Reads and Writes to the Root Complex, so that central platform resources (such as main memory, RC internal interconnects, snoop resources, and other resources associated with the RC) can be power managed without impacting Endpoint functionality and performance. Current platform Power Management (PM) policies estimate when devices are idle (e.g. using inactivity timers). A wrong estimate can cause performance issues, or even hardware failures. In the worst case, users or administrators will disable PM to preserve functionality at the cost of increased platform power consumption.

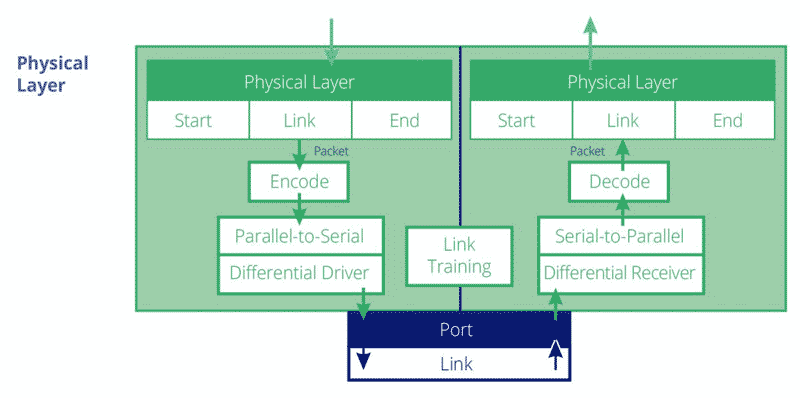

LTSSM

The LTSSM (Link Training and Status State Machine) block checks and memorizes what is received on each lane, determines what should be transmitted on each lane and transitions from one state to another.

Link Training and Status State Machine consists of 11 top-level states: Detect, Polling, Configuration, Recovery, L0, L0s, L1, L2, Hot Reset, Loopback and Disable. These can be grouped into five categories: Link Training states, Re-Training (Recovery) state, Software driven Power Management states, Active-State Power Management states, Other states.

The flow of the LTSSM follows the Link Training states when exiting from any type of Reset: Detect >> Polling >> Configuration >> L0. In L0 state, normal packet transmission and reception are in progress. The Recovery state is used for a variety of reasons, such as returning from a low-power Link state like L1, or changing the link bandwidth. In this state, the Link repeats as much of the training process as needed to handle the matter and returns to L0. Power management software can also place a device into a low-power device state, forcing the Link into a lower Power Management Link state (L1 or L2). If there are no packets to send for a time, ASPM may be allowed to transition the Link into low-power ASPM states (L0s or ASPM L1). In addition, software can direct a link to enter some other special states (Disabled, Loopback, Hot Reset).
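The top-level flow described above can be captured as a transition table and used to check a sequence of states. This is a simplified subset that omits substates and several less common arcs, so treat it as an illustration of the paragraph rather than a complete model of the specification.

```python
# Simplified subset of top-level LTSSM transitions (substates omitted).
TRANSITIONS = {
    "Detect": {"Polling"},
    "Polling": {"Configuration", "Detect"},
    "Configuration": {"L0", "Detect", "Loopback", "Disabled"},
    "L0": {"Recovery", "L0s", "L1", "L2"},
    "L0s": {"L0", "Recovery"},
    "L1": {"Recovery"},
    "L2": {"Detect"},
    "Recovery": {"L0", "Configuration", "Detect", "Loopback", "Hot Reset", "Disabled"},
    "Hot Reset": {"Detect"},
    "Loopback": {"Detect"},
    "Disabled": {"Detect"},
}


def check_path(path: list) -> bool:
    """Raise ValueError at the first step that TRANSITIONS does not allow."""
    for src, dst in zip(path, path[1:]):
        if dst not in TRANSITIONS[src]:
            raise ValueError(f"illegal transition {src} -> {dst}")
    return True
```

For example, the path after reset (Detect, Polling, Configuration, L0) is accepted, and so is L0, L1, Recovery, L0, whereas L1 directly to L0 is rejected because exiting L1 always goes through Recovery.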

M

mPCIe

This ECN allows integration with M-PHY. It requires implementation of a separate physical layer (including an LTSSM) for the M-PHY interface; an M-PHY model and BFM are also necessary for verification. It enables PCI Express (PCIe®) architecture to operate over the MIPI M-PHY® physical layer technology, extending the benefits of the PCIe I/O standard to mobile devices including thin laptops, tablets and smartphones.

The adaptation of PCIe protocols to operate over the M-PHY physical layer provides the Mobile industry with a low-power, scalable solution that enables interoperability and a consistent user experience across multiple devices. The layered architecture of the PCIe I/O technology facilitates the integration of the power-efficient M-PHY with its extensible protocol stack to deliver best-in-class and highly scalable I/O functionality for mobile devices.

MSI

Message Signaled Interrupts (MSI) enable the PCI Express device to deliver interrupts by performing memory write transactions, instead of using the INTx message emulation mechanism.

MSI-X

MSI-X defines a separate optional extension to basic MSI functionality. Compared to MSI, MSI-X supports a larger maximum number of vectors and independent message addresses and data for each vector, specified by a table that resides in Memory Space. However, most of the other characteristics of MSI-X are identical to those of MSI. A function is permitted to implement both MSI and MSI-X, but system software is prohibited from enabling both at the same time. If system software enables both at the same time, the result is undefined.
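Each MSI-X table entry in Memory Space is 16 bytes: four little-endian DWORDs holding the Message Address, Message Upper Address, Message Data and Vector Control, with the per-vector Mask bit in bit 0 of Vector Control. A minimal decoder over a byte copy of the table; the function and type names are illustrative.

```python
import struct
from typing import NamedTuple

MSIX_ENTRY_SIZE = 16  # bytes per MSI-X table entry


class MsixEntry(NamedTuple):
    address: int
    data: int
    masked: bool


def read_msix_entry(table: bytes, vector: int) -> MsixEntry:
    """Decode one MSI-X table entry (four little-endian DWORDs)."""
    addr_lo, addr_hi, data, vector_ctrl = struct.unpack_from("<IIII", table, vector * MSIX_ENTRY_SIZE)
    return MsixEntry(address=(addr_hi << 32) | addr_lo, data=data, masked=bool(vector_ctrl & 1))
```

Unlike MSI, where all vectors share one address and the vector number is folded into the message data, every MSI-X vector here carries its own address, data and mask.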

Multi-Function

A multi-function device is a single device that contains up to 8 PCI Express physical functions (up to 32 with ARI), which software views as independent devices. This is used to integrate several devices in the same chip, saving chip and switch cost, power, and board space.

Multicast

This optional feature enables devices to send/receive posted TLPs that will be distributed to multiple recipients. This enables potentially large performance gains because the transmitter does not need to transmit one copy of a TLP to each recipient.

N

Native PCIe Endpoint

An Endpoint is a device that resides at the bottom of the branches of the tree topology and implements a single Upstream Port toward the Root. Native PCIe Endpoints are PCIe devices designed from scratch, as opposed to old PCI device designs with an added PCIe interface. Native PCIe Endpoints are memory-mapped devices (MMIO devices).

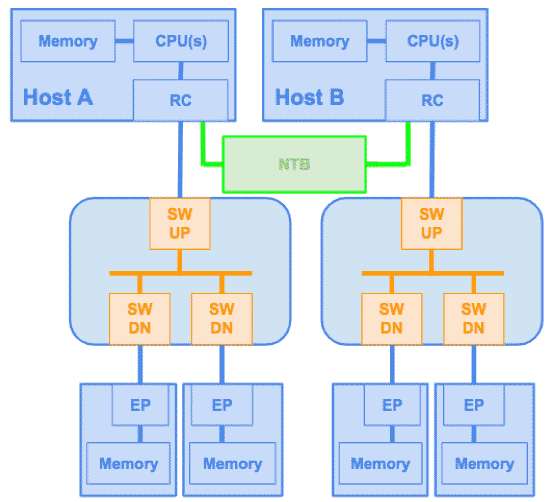

Non-Transparent Bridge (NTB)

A Switch is the PCIe component that interconnects several PCIe devices, extending the number of devices that can be connected to the Host. A PCIe Switch is transparent: all components in the PCIe hierarchy share the same address domain, defined by the (single) Host. In multiprocessor systems, each processor has its own address domain, defined during enumeration, so the processors cannot share a single address domain.

Non-Transparent Bridge (NTB) has two main purposes:

- allow inter-processor communication between different Hosts.

- ensure address domain isolation (by being non-transparent).

Being non-transparent, by definition, implies that one Host can’t enumerate or directly access another Host’s PCIe hierarchy.
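A common way for an NTB to provide inter-host communication while keeping the address domains isolated is a translation window: accesses that hit a BAR on one side are redirected, by offset, into a chosen region of the other host's address space. The text does not describe this mechanism, so the sketch below is an assumption, with hypothetical names and example addresses.

```python
from dataclasses import dataclass


@dataclass
class NtbWindow:
    """Hypothetical NTB translation window: a local BAR mapped onto a remote region."""
    local_base: int
    size: int
    remote_base: int

    def translate(self, local_addr: int) -> int:
        offset = local_addr - self.local_base
        if not 0 <= offset < self.size:
            # Addresses outside the window never reach the other domain.
            raise ValueError("address outside the NTB window")
        return self.remote_base + offset
```

Only the region exposed through the window is reachable, which is how the bridge allows communication without letting one Host enumerate or access the whole hierarchy of the other.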

Normal Mode

Normal mode is the default receive interface mode that is suitable for most applications with minimum complexity. In this mode:

- The Receive buffer is completely implemented inside the Core and is large enough to store all received TLPs

- All credit updates are handled internally

- Only TLPs intended for the user application are output on the Receive interface, in the order they are received from the link

Normal Mode with Non-Posted Buffer is similar to normal mode except that an additional buffer is implemented inside the Core that can be used to temporarily store non-posted TLPs. This enables the application to continue receiving posted and completion TLPs even when it is not ready to process non-posted TLPs.

NVMe

Specification that uses PCIe to provide the bandwidth needed for SSD applications.

P

Parity

Parity data protection is an optional feature that adds parity bits to data to protect it between the data link layer and the application’s logic.

PASID

PASID (Process Address Space ID) is an optional feature that enables sharing of a single Endpoint device across multiple processes while providing each process a complete 64-bit virtual address space. In practice, this feature adds support for a TLP Prefix containing a 20-bit PASID that can be added to memory transaction TLPs.

PCI Express Layer

The PCI Express layer consists of:

- a PCIe Controller

- a PCIe Tx/Rx Interface between the Bridge and the PCIe Controller

- a PCIe Configuration Interface to give the Bridge access to the PCIe Config Space

- a PCIe Misc Interface to allow the Bridge to manage Low-Power, Interrupts, etc.

PCIe Switch

A PCI Express switch is a device that allows expansion of the PCI Express hierarchy. A switch comprises one upstream switch port, one or more downstream switch ports, and switching logic that routes TLPs between the ports. A PCI Express switch is “transparent”, meaning that software and other devices do not need to be aware of its presence in the hierarchy, and no driver is required to operate it.

Peer-to-Peer

In PCI Express, data transfers usually occur only between the Root Complex and the PCI Express devices. However, peer-to-peer allows a PCI Express device to communicate with another PCI Express device in the hierarchy. For this to happen, Root Ports and Switches must support peer-to-peer, which is optional.

Per-Vector Masking

Per-vector masking is an optional feature, set by the Core Variable K_PCICONF, that adds Mask Bits and Pending Bits registers to the MSI capability.

The Mask Bits register is programmed by the host and its value can be read by the application via the APB configuration interface, if required.

The behavior of the Pending Bits register depends on the associated interrupt:

- If an MSI is requested by the application and the corresponding message number is masked, then the Core sets the matching pending bit

- If an MSI is requested by the application and the corresponding message number is not masked, then the Core clears the matching pending bit

- If the interrupt request is satisfied while the interrupt source is still masked, then the application must write 1 to the corresponding bit through the APB configuration interface to clear it
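The Pending Bits rules above can be modelled directly. This is a hypothetical software model of the described register behavior, not the core's RTL; it assumes up to 32 MSI vectors and represents the application's APB write-1-to-clear as a method call.

```python
class MsiPerVectorMasking:
    """Hypothetical model of the MSI Mask Bits / Pending Bits behavior."""

    MAX_VECTORS = 32

    def __init__(self):
        self.mask_bits = 0     # programmed by the host
        self.pending_bits = 0  # maintained by the core

    def _bit(self, vector: int) -> int:
        if not 0 <= vector < self.MAX_VECTORS:
            raise ValueError("vector out of range")
        return 1 << vector

    def request_msi(self, vector: int) -> bool:
        """Application requests an MSI; return True if the message is sent."""
        bit = self._bit(vector)
        if self.mask_bits & bit:
            self.pending_bits |= bit   # masked: record it as pending
            return False
        self.pending_bits &= ~bit      # not masked: send and clear pending
        return True

    def clear_pending(self, vector: int) -> None:
        """Application writes 1 via APB when the source is satisfied while still masked."""
        self.pending_bits &= ~self._bit(vector)
```

A typical sequence: the host masks vector 1, the application requests it and the pending bit is set; the host later unmasks it, and the next request sends the message and clears the pending bit.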

PHYMAC

The PHYMAC (Physical Media Access Controller) Layer manages the initialization of the PCI Express link as well as the physical events that occur during normal operation. This layer functions at the PIPE clock frequency. The PHYMAC Layer consists of:

- The LTSSM block, which is the central intelligence of the physical layer

- On the receive side, one Deskew FIFO and eight similar RxLane blocks

- On the transmit side, one TxAlign and LTSTX block and eight TxLane blocks

Physical Functions (PFs)

Physical Functions (PFs) are full-featured PCIe functions; they are discovered, managed, and manipulated like any other PCIe device, and PFs have a full configuration space. It is possible to configure or control the PCIe device via the PF and in turn, the PF has the complete ability to move data in and out of the device. Each PCI Express device can have from one (1) up to eight (8) PFs. Each PF is independent and is seen by software as a separate PCI Express device, which allows several devices in the same chip and makes software development easier and less costly.

Physical Layer

The Physical Layer is the lowest hierarchical layer for PCIe, as shown in the figure below. TLP and DLLP packets are sent from the Data Link Layer to the Physical Layer for transmission over the link. The Physical Layer is divided into two parts: the logical one and the electrical one. The Logical Physical Layer contains the digital logic that prepares the packets for serial transmission on the Link and reverses that process for inbound packets. The Electrical Physical Layer is the analog interface of the Physical Layer that connects to the Link and consists of differential drivers and receivers for each lane.

PMUX

PMUX (Protocol Multiplexing) enables multiple protocols to share a PCIe Link. It is the mechanism to enable transmission of packets other than TLP/DLLP across the PCIe link.

Power Gating

Technique used to reduce power consumption when some blocks of a circuit are not in use. Power gating shuts off the current to prevent leakage currents.

PTM

PTM (Precision Time Measurement) enables the coordination of timing. It allows the transfer of information across multiple devices with independent local timebases without relying on software to coordinate time among all devices.
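The timing arithmetic behind PTM is a two-way timestamp exchange: the requester timestamps when it sends a request (t1) and receives the response (t4), and the responder timestamps when it receives the request (t2) and sends the response (t3). Assuming the link delay is the same in both directions, the one-way delay is ((t4 - t1) - (t3 - t2)) / 2, and the offset between the two clocks follows from it. The function names below are illustrative.

```python
def ptm_link_delay(t1: float, t2: float, t3: float, t4: float) -> float:
    """One-way link delay from a two-way timestamp exchange.

    t1: requester sends request (requester clock)
    t2: responder receives request (responder clock)
    t3: responder sends response (responder clock)
    t4: requester receives response (requester clock)
    """
    return ((t4 - t1) - (t3 - t2)) / 2


def clock_offset(t1: float, t2: float, delay: float) -> float:
    """Responder time minus requester time, assuming a symmetric link."""
    return t2 - (t1 + delay)
```

For example, with a true delay of 10 and a responder clock running 50 ahead, the timestamps 100, 160, 170 and 130 give back a delay of 10 and an offset of 50, regardless of how long the responder took between t2 and t3.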

R

Receive Buffer

The Receive Buffer receives TLPs and DLLPs after the start and end characters have been removed. The size of the Receive Buffer depends on the allocated credits for Posted/Non-Posted/Completion Header and Data, and on whether ECRC or Atomic operations are used.

Typically, its size is computed as given below:

- RxBuffer size (Bytes) = (PH + NPH + CPLH + PD + NPD + CPLD) x 16 Bytes
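The formula above can be evaluated for a given credit allocation. One data credit corresponds to 16 bytes (4 DW); the `header_credit_bytes` parameter is a hypothetical refinement for cases where each header needs more storage (for example 20 bytes with a 4-byte ECRC digest), and with its default of 16 the function reproduces the typical formula exactly. The credit values in the usage note are illustrative.

```python
DATA_CREDIT_BYTES = 16  # one data credit = 4 DW = 16 bytes


def rx_buffer_bytes(ph: int, nph: int, cplh: int, pd: int, npd: int, cpld: int,
                    header_credit_bytes: int = 16) -> int:
    """Receive Buffer size in bytes from the allocated flow control credits."""
    headers = ph + nph + cplh
    data = pd + npd + cpld
    return headers * header_credit_bytes + data * DATA_CREDIT_BYTES
```

With PH=32, NPH=32, CPLH=64, PD=256, NPD=8 and CPLD=512, the typical formula gives (32 + 32 + 64 + 256 + 8 + 512) x 16 = 14464 bytes; allowing 20 bytes per header raises this to 14976 bytes.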

Receive Interface

The Receive Interface allows user applications to receive TLPs from the PCI Express bus.

The Receive Interface can operate in three different modes depending on the type and complexity of the user application:

- Normal Mode

- Normal Mode with Non-Posted Buffer

- RX Stream Mode

Refclk

Allows wider use of Spread Spectrum Clocking (SSC), which eases cabling applications. It requires some changes in the PHY (elastic buffer) and minimal changes in the core (some added configuration bits and some changes to the SKP Ordered Set transmission rules).

Resizable BAR

This feature allows a function to report several possible sizes for its BAR, instead of either not being allocated (and thus left out of the system) or being forced to report a smaller aperture in order to be allocated. This gives the host some flexibility when not all memory spaces can be allocated.
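The host-side flexibility described above can be sketched as follows. This is a minimal illustration, not a real driver: it assumes the supported sizes are given as a bit mask in which bit n means a size of 2^n MB (a power-of-two encoding starting at 1 MB), and the "largest size that fits" policy and the function names are hypothetical.

```python
from typing import List, Optional

MB = 1 << 20


def supported_sizes(mask: int) -> List[int]:
    """Decode an (assumed) size mask: bit n set means 2**n MB is supported."""
    return [MB << n for n in range(mask.bit_length()) if (mask >> n) & 1]


def pick_bar_size(mask: int, available_bytes: int) -> Optional[int]:
    """Return the largest supported BAR size that fits, or None if none fits."""
    fitting = [s for s in supported_sizes(mask) if s <= available_bytes]
    return max(fitting) if fitting else None


# Hypothetical function supporting 1 MB through 256 MB (bits 0..8).
print(pick_bar_size(0x1FF, 100 * MB) // MB)  # 64
print(pick_bar_size(0x100, 64 * MB))         # None
```

A function that could only report its 256 MB aperture (mask 0x100) would not be allocated in a 64 MB window, whereas one advertising several sizes still gets a usable, smaller BAR.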

Retimer

Retimers (extension devices) are expected to become important with Gen4, and this feature allows PCI Express upstream devices to optionally detect their presence, for example in order to adjust for latency. The core detects a bit in the training sets and sets a bit in the Link Status 2 register accordingly.

Root Complex

The Root Complex is not clearly defined in the PCIe specification, but it can be described as the interface between the system CPU and the PCIe topology, with its PCIe Ports labeled as Root Ports. The term “complex” reflects the large variety of components that can be included in this interface (processor interface, DRAM interface, etc.).

Root Port

The PCI Express Root Port is a port on the Root Complex, the portion of the motherboard that contains the host bridge. The host bridge allows the PCI Express ports to talk to the rest of the computer, so that components plugged into those ports can work with the computer.

RX Stream Mode

In RX stream mode, the core does not store received TLPs internally but outputs them to the user application immediately. This mode enables greater control over TLP ordering and processing, and is particularly suitable for Bridge and Switch designs.

RX Stream Watchdog

Although it is not recommended, it may be necessary in some implementations to run TL_CLK at a frequency lower than the minimum frequency required in RX stream mode.

The RX stream watchdog is an optional feature that stops the incoming data flow when the Receive Buffer is more than 75% full, and resumes normal operation when the buffer is less than 25% full. This can avoid Receive Buffer overflow and the resulting data corruption; however, this feature is not compliant with the PCIe specification.
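The 75%/25% thresholds form a hysteresis loop, which the following sketch models in software. The class and method names are hypothetical, and this is only a behavioral illustration of the thresholds described above, not the core's actual implementation.

```python
class RxStreamWatchdog:
    """Hysteresis flow gate over a buffer fill level (fractions of capacity)."""

    def __init__(self, capacity: int, high: float = 0.75, low: float = 0.25):
        if not 0 <= low < high <= 1:
            raise ValueError("expected 0 <= low < high <= 1")
        self.capacity = capacity
        self.high = high
        self.low = low
        self.paused = False

    def update(self, fill_bytes: int) -> bool:
        """Feed the current fill level; return True while inflow is stopped."""
        ratio = fill_bytes / self.capacity
        if not self.paused and ratio > self.high:
            self.paused = True   # above the high threshold: stop incoming data
        elif self.paused and ratio < self.low:
            self.paused = False  # drained below the low threshold: resume
        return self.paused


wd = RxStreamWatchdog(capacity=4096)
print([wd.update(f) for f in (1000, 3200, 2000, 900)])  # [False, True, True, False]
```

The gap between the two thresholds keeps the gate from toggling on every small change in fill level: at 2000 bytes (about 49%) the flow stays stopped because the buffer has not yet drained below 25%.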

RxLane

The RxLane block descrambles and decodes the received PLPs and reports to the LTSSM the type of Ordered Set (OS) received, its parameters, and the number of consecutive OSs received.

S

Scatter-Gather

The DMA source and/or destination start address is a pointer to a chained list of page descriptors. Each descriptor contains the address and size of a data block (page), as well as a pointer to the next descriptor; this next pointer also makes circular buffers possible.
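A descriptor chain of this kind can be walked as in the sketch below. The `Descriptor` layout (address, size, next) is a hypothetical software model, not a real DMA engine's descriptor format; the walk stops at the end of a linear chain or after one full pass of a circular one, with a length guard against malformed loops.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(eq=False)  # identity comparison; chains may be circular
class Descriptor:
    """Hypothetical page descriptor: block address, block size, next pointer."""
    address: int
    size: int
    next: Optional["Descriptor"] = None


def walk_chain(head: Optional[Descriptor],
               max_descriptors: int = 4096) -> List[Tuple[int, int]]:
    """Collect (address, size) per block until the chain ends or wraps to head."""
    blocks: List[Tuple[int, int]] = []
    d = head
    while d is not None:
        if len(blocks) >= max_descriptors:
            raise RuntimeError("descriptor chain too long or malformed loop")
        blocks.append((d.address, d.size))
        d = d.next
        if d is head:  # circular buffer: one full pass completed
            break
    return blocks


d3 = Descriptor(0x3000, 512)
d2 = Descriptor(0x2000, 4096, d3)
d1 = Descriptor(0x1000, 4096, d2)
print(sum(size for _, size in walk_chain(d1)))  # 8704
d3.next = d1  # close the ring to form a circular buffer
print(len(walk_chain(d1)))  # 3
```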

Simulation Mode

Simulation mode is a special mode where some internal delays and parameters are adjusted in order to accelerate link training and to test certain features within a reasonable simulation time.

SR-IOV

Single Root I/O Virtualization (SR-IOV), introduced by the PCI-SIG, is a step forward in making virtualization easier to implement within the PCI bus itself. SR-IOV adds definitions to the PCI Express (PCIe) specification that enable multiple Virtual Machines (VMs) to share PCI hardware resources.

Virtualization provides several important benefits to system designers. It makes it possible to run a large number of virtual machines per server, which reduces the need for hardware and the resulting space and power costs. It creates the ability to start, stop, add, or remove servers independently, increasing flexibility and scalability. It also adds the capability to run different operating systems on the same host machine, again reducing the need for discrete hardware.

In a virtualized environment, this feature allows the functions of an Endpoint to be easily and safely shared among several virtual machines. Up to 64 virtual functions per physical function are supported.

Switch Port

A Switch Port can be either upstream or downstream. It is always part of a switch and cannot be found in any other PCI Express device type. A Switch Port contains “Type 1” configuration registers.
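As an illustration of the Type 1 distinction, the sketch below decodes the Header Type register found at configuration space offset 0x0E: bits 6:0 give the header layout (0 for Type 0, 1 for Type 1) and bit 7 flags a multi-function device. Reading configuration space is platform-specific and not shown; the function name is hypothetical. Note that a Type 1 header alone does not identify a Switch Port, since Root Ports and bridges also use it.

```python
from typing import Tuple


def header_layout(header_type_reg: int) -> Tuple[int, bool]:
    """Decode the Header Type register (config space offset 0x0E).

    Bits 6:0 give the header layout (0 = Type 0, 1 = Type 1);
    bit 7 indicates a multi-function device.
    """
    layout = header_type_reg & 0x7F
    multi_function = bool(header_type_reg & 0x80)
    return layout, multi_function


print(header_layout(0x01))  # (1, False): Type 1 header, e.g. a Switch Port
print(header_layout(0x80))  # (0, True): Type 0 header, multi-function device
```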

T

TLP Prefix

One or more DWORDs are prepended to the TLP header in order to carry additional information for various purposes (TLP Processing Hints, PASID, MR-IOV, vendor-specific, etc.). TLP Prefix support is optional, and all devices on the path from the Requester to the Completer must support this capability for it to be enabled.

TPH

Adding hints about how the system should handle TLPs that target memory space can reduce latency and traffic congestion. The specification defines TPH (TLP Processing Hints) as providing information about which of several possible cache locations in the system would be the optimal place for a temporary copy of a TLP. TPH enables a Requester or Completer (usually an Endpoint) to set attributes in a request targeting system memory, in order to help the host optimize cache management. The mechanisms are used to:

- Ease data residency and allocation within the system cache hierarchy

- Reduce device memory access latencies and the statistical variation in those latencies

- Minimize memory and system interconnect bandwidth, and the associated power consumption

In addition, this information enables the Root Complex and Endpoint to optimize their handling of requests by identifying and separating data likely to be reused soon from bulk flows that could monopolize system resources. Providing information about expected data usage patterns allows the best use of system fabric resources such as memory, caches, and system interconnects, reducing possible limits on system performance.

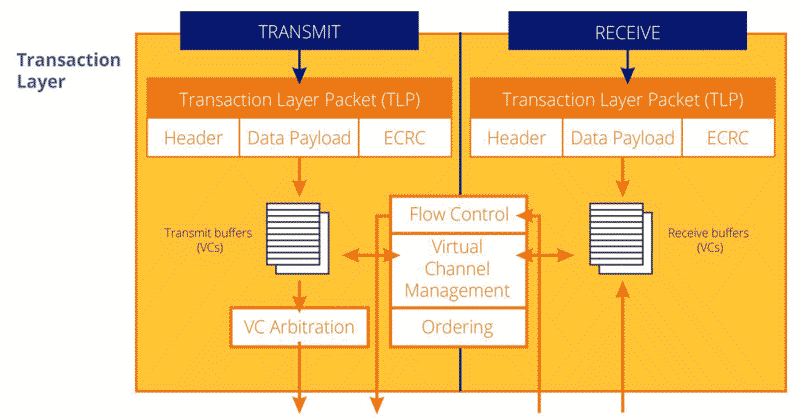

Transaction Layer

On the transmit side, the Transaction Layer manages the generation of TLPs from both the Application Layer and the Configuration Space, and checks for flow control credits before passing the TLPs to the Data Link Layer.

On the receive side, the Transaction Layer extracts received TLPs from the Receive Buffer, checks their format and type, and then routes them to the Configuration Space or to the Application Layer. It also manages the calculation of credits for the Receive Buffer. Even though it is not part of the Transaction Layer function, this layer also includes the Configuration Space, which can receive and generate dedicated TLPs.
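The transmit-side credit check of the Transaction Layer can be sketched as below. As we understand the PCIe flow control rules, credit counters wrap around, so a TLP may be sent only if (limit - (consumed + required)) mod 2^N <= 2^N / 2. This sketch assumes non-scaled flow control with 8-bit header and 12-bit data counters and 16 bytes per data credit; it does not model infinite-credit advertisements, and the function names are hypothetical.

```python
HDR_FIELD_BITS = 8     # header credit counter width (assumed, non-scaled FC)
DATA_FIELD_BITS = 12   # data credit counter width (assumed, non-scaled FC)
BYTES_PER_DATA_CREDIT = 16


def credits_ok(limit: int, consumed: int, required: int, field_bits: int) -> bool:
    """Modular gating test: counters wrap, so compare modulo 2**field_bits."""
    modulus = 1 << field_bits
    return (limit - (consumed + required)) % modulus <= modulus // 2


def can_transmit(hdr_limit: int, hdr_consumed: int,
                 data_limit: int, data_consumed: int, payload_bytes: int) -> bool:
    """A TLP needs 1 header credit plus ceil(payload / 16) data credits."""
    data_required = -(-payload_bytes // BYTES_PER_DATA_CREDIT)  # ceiling division
    return (credits_ok(hdr_limit, hdr_consumed, 1, HDR_FIELD_BITS)
            and credits_ok(data_limit, data_consumed, data_required, DATA_FIELD_BITS))


print(can_transmit(10, 9, 100, 96, 64))   # True: exactly enough credits
print(can_transmit(10, 10, 100, 96, 64))  # False: no header credit left
print(can_transmit(2, 250, 100, 96, 64))  # True: header limit wrapped past 255
```

The modular comparison is what lets the counters wrap: in the last call the limit of 2 really stands for 258, leaving 8 header credits even though 2 is numerically smaller than 250.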

U

Upconfigure

Refers to the ability of a PCI Express device to have its link width increased after initial link training. For example, a PCI Express device can initially enable only one lane so that the link trains as x1, and later direct the link from Recovery to the Config state and enable 4 lanes so that the link retrains as x4.

Upstream Port

The port that faces toward the Root Complex.

V

VC

VC (Virtual Channel) is a mechanism defined by the PCI Express standard for differentiated bandwidth allocation. Virtual channels have dedicated physical resources (buffering, flow control management, etc.) across the hierarchy. Transactions are associated with one of the supported VCs according to their Traffic Class (TC) attribute, through TC-to-VC mapping specified in the configuration block of the PCIe device. This allows higher-priority transactions to be mapped to a separate virtual channel, avoiding resource conflicts with low-priority traffic.
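TC-to-VC mapping can be pictured as one 8-bit mask per VC, where bit n set means Traffic Class n uses that VC (our understanding of the TC/VC Map field in the VC capability). The sketch below resolves a TC to its VC and treats a TC mapped to several VCs as a configuration error; the mapping values and function name are hypothetical.

```python
from typing import Dict, Optional


def vc_for_tc(tc_vc_maps: Dict[int, int], tc: int) -> Optional[int]:
    """Return the VC whose TC/VC map has bit `tc` set, or None if unmapped.

    tc_vc_maps maps VC ID -> 8-bit mask (bit n set = TCn uses this VC).
    """
    if not 0 <= tc <= 7:
        raise ValueError("Traffic Class must be 0..7")
    matches = [vc for vc, mask in tc_vc_maps.items() if (mask >> tc) & 1]
    if len(matches) > 1:
        raise ValueError(f"TC{tc} is mapped to several VCs: {matches}")
    return matches[0] if matches else None


# Hypothetical two-VC setup: TC0-TC3 on VC0, TC4-TC7 on VC1 (higher priority).
maps = {0: 0b0000_1111, 1: 0b1111_0000}
print(vc_for_tc(maps, 0), vc_for_tc(maps, 6))  # 0 1
```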

Virtual Functions (VFs)

Virtual Functions (VFs) are ‘lightweight’ PCIe functions designed solely to move data in and out. Each VF is attached to an underlying PF, and each PF can have zero (0) or more VFs. In addition, PFs within the same device can each have a different number of VFs. While VFs are similar to PFs, they intentionally have a reduced configuration space because they inherit most of their settings from their PF.
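One way to see how VFs hang off their PF is how each VF gets its own Routing ID. As we understand the SR-IOV specification, VF number n (1-based) gets the Routing ID PF RID + First VF Offset + (n - 1) x VF Stride. The sketch below computes this and formats the result as bus:device.function, assuming the non-ARI 8/5/3-bit split; the offset and stride values and the function names are hypothetical.

```python
def vf_routing_id(pf_rid: int, first_vf_offset: int, vf_stride: int,
                  vf_number: int) -> int:
    """Routing ID of VF `vf_number` (1-based), wrapped to 16 bits."""
    if vf_number < 1:
        raise ValueError("VF numbers start at 1")
    return (pf_rid + first_vf_offset + (vf_number - 1) * vf_stride) & 0xFFFF


def bdf(rid: int) -> str:
    """Format a Routing ID as bus:device.function (non-ARI interpretation)."""
    return f"{rid >> 8:02x}:{(rid >> 3) & 0x1f:02x}.{rid & 0x7}"


# Hypothetical PF at 01:00.0 with First VF Offset = 1 and VF Stride = 1.
pf = 0x0100
print([bdf(vf_routing_id(pf, 1, 1, n)) for n in (1, 2, 3)])
# ['01:00.1', '01:00.2', '01:00.3']
```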