Transmitting data at high speeds between a DRAM device and a memory controller requires careful design of IO drivers to ensure that the required electrical signaling levels are achieved. Variations in process, voltage, and temperature can alter the electrical characteristics of the output driver circuitry, resulting in deviations from the desired signaling levels. Additionally, variations in other system elements, such as trace impedance, reference voltage (Vref), and termination voltage (Vterm) can also impact signaling levels. To address these issues, Rambus pioneered the use of Output driver calibration in memory systems to improve communication speeds and provide greater reliability over a wide range of operating conditions.

- Improves data rates and system voltage margin

- Increases DRAM yield

- Compensates for variations in trace impedance and termination voltage

- Improves system reliability over a wide range of operating conditions

What is Output Driver Calibration Technology?

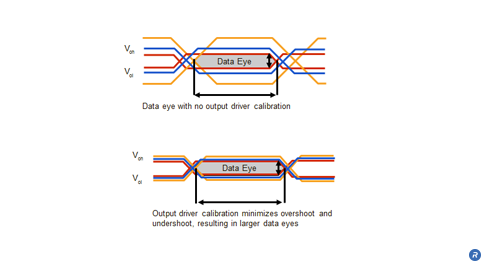

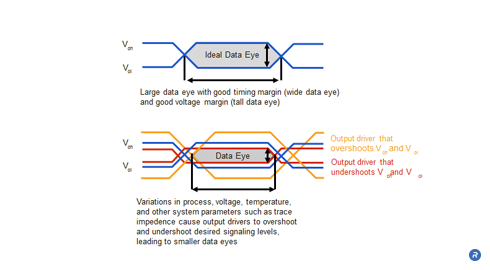

Variations in process, voltage, and temperature can reduce the size of data eyes. Data eyes reveal characteristics of the quality of the signaling environment such as timing and voltage margins. Robust signaling relies on having wide (good timing margin) and tall (good voltage margin) data eyes. Output drivers are designed to drive signals between high and low voltage levels, shown as Voh and Vol in the previous illustration. Variations in process, voltage, temperature, and other factors can cause output drivers to overshoot and/or undershoot the desired signaling voltage levels, resulting in reduced margins that impact signal integrity. Reduced timing margins limit the maximum signaling speed because the window of time over which the data is valid (width of the data eye) is smaller. Reduced voltage margins can require larger IO voltage swings to ensure accurate transmission of data, but such larger swings result in increased IO power and can increase the sensitivity of the system to cross talk. In order to increase signaling rates and reduce IO power, output driver overshoot and undershoot must be managed.

Output driver calibration allows for optimal signaling levels to be established and maintained using adjustable output drive strengths to compensate for variations in process, voltage, and temperature. Calibrating the output drivers during normal operation allows for drive strength adjustments to respond to changes in voltage and temperature which can fluctuate while a system is in use.

Output Driver Calibration uses feedback that is provided to the output driver circuitry to adjust the output impedance of the output driver circuitry, thereby controlling the circuit’s drive strength.in order to achieve optimal signal performance. The driver’s output impedance is compared to a reference resistor RZQ that is placed off the device. The output impedance is then calibrated to be equal to or proportional to the reference precision resistor.

The circuit above depicts how an Output Driver Calibration circuit may be configured. The voltage dropped across the topmost array of resistors is dependent upon the state of the respective transistors in series with those resistors and the value of the RZQ resistance on the line. The states of the transistors in the transistor array are individually controlled by the Drive Strength Register and are set so that the value of Vterm = Vref. The reference voltage, Vref , is representative of the desired output signal level. When the Vterm = Vref condition is achieved, the impedance in the top of the network divider is optimized for the driver. The values used for configuring the transistor array can be stored in the register and may be updated as needed.

The figure above illustrates the effect that Output Driver Calibration has on the magnitude of overshoot and undershoot along the transmission line. The reduction in overshoot and under-shoot results in increased voltage and timing margins.

The reference resistor RZQ and much of the circuitry for Output Driver Calibration can also be utilized for on-die termination ODT calibration.

Who Benefits?

Output driver calibration provides benefits from the device up through the system. By increasing DRAM yield and allowing DRAM output drivers to automatically compensate for process variation, output driver calibration improves margin and device testability, saving design and test time. Output driver calibration also allows board designers to compensate for variations in trace impedance and termination voltage caused by manufacturing and assembly processes. This ability to compensate for manufacturing tolerances of some components enables test specifications to be relaxed and saves component and tester costs.

At the system level, Output driver calibration enables system integrators to use one DRAM in multiple designs that utilize different trace impedances and that operate in different environments. Output driver calibration also increases voltage and timing margins, resulting in higher system reliability over a wider range of operating conditions. In addition, adjustable drive strengths help compensate for variations in temperature which allows system integrators to more effectively manage their system power and thermal budgets, thereby decreasing overall system cost.